← return to practice.dsc10.com

Below are practice problems tagged for Lecture 9 (rendered directly from the original exam/quiz sources).

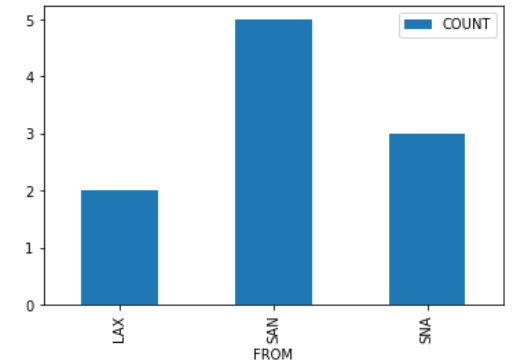

Suppose we create a DataFrame called socal containing

only King Triton’s flights departing from SAN, LAX, or SNA (John Wayne

Airport in Orange County). socal has 10 rows; the bar chart

below shows how many of these 10 flights departed from each airport.

Consider the DataFrame that results from merging socal

with itself, as follows:

double_merge = socal.merge(socal, left_on='FROM', right_on='FROM')How many rows does double_merge have?

Answer: 38

There are two flights from LAX. When we merge socal with

itself on the 'FROM' column, each of these flights gets

paired up with each of these flights, for a total of four rows in the

output. That is, the first flight from LAX gets paired with both the

first and second flights from LAX. Similarly, the second flight from LAX

gets paired with both the first and second flights from LAX.

Following this logic, each of the five flights from SAN gets paired with each of the five flights from SAN, for an additional 25 rows in the output. For SNA, there will be 9 rows in the output. The total is therefore 2^2 + 5^2 + 3^2 = 4 + 25 + 9 = 38 rows.

The average score on this problem was 27%.

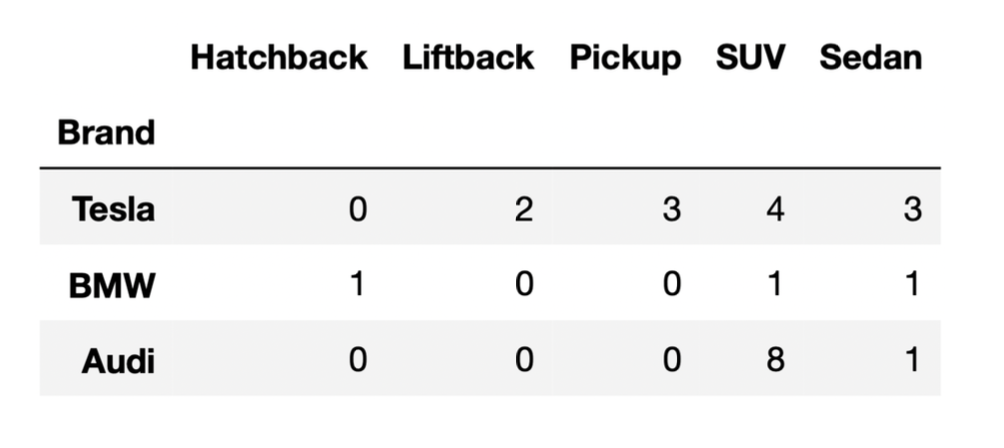

We also have a DataFrame that contains the distribution of

“BodyStyle” for all “Brands” in evs, other than Nissan.

Suppose we’ve run the following few lines of code.

tesla = evs[evs.get("Brand") == "Tesla"]

bmw = evs[evs.get("Brand") == "BMW"]

audi = evs[evs.get("Brand") == "Audi"]

combo = tesla.merge(bmw, on="BodyStyle").merge(audi, on="BodyStyle")How many rows does the DataFrame combo have?

21

24

35

65

72

96

Answer: 35

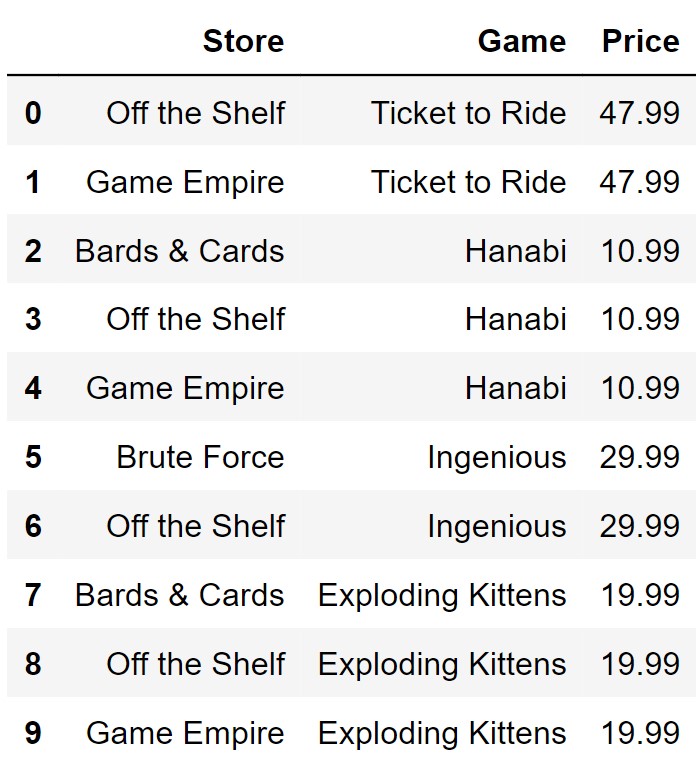

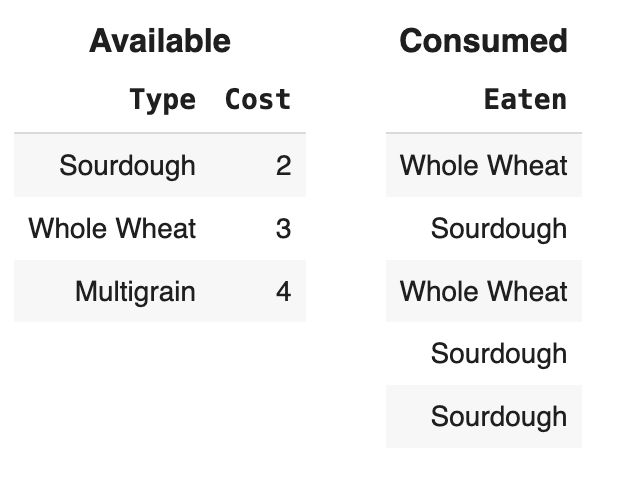

Let’s attempt this problem step-by-step. We’ll first determine the

number of rows in tesla.merge(bmw, on="BodyStyle"), and

then determine the number of rows in combo. For the

purposes of the solution, let’s use temp to refer to the

first merged DataFrame,

tesla.merge(bmw, on="BodyStyle").

Recall, when we merge two DataFrames, the resulting

DataFrame contains a single row for every match between the two columns,

and rows in either DataFrame without a match disappear. In this problem,

the column that we’re looking for matches in is

"BodyStyle".

To determine the number of rows of temp, we need to

determine which rows of tesla have a

"BodyStyle" that matches a row in bmw. From

the DataFrame provided, we can see that the only

"BodyStyle"s in both tesla and

bmw are SUV and sedan. When we merge tesla and

bmw on "BodyStyle":

tesla each match the 1 SUV row in

bmw. This will create 4 SUV rows in temp.tesla each match the 1 sedan row in

bmw. This will create 3 sedan rows in

temp.So, temp is a DataFrame with a total of 7 rows, with 4

rows for SUVs and 3 rows for sedans (in the "BodyStyle")

column. Now, when we merge temp and audi on

"BodyStyle":

temp each match the 8 SUV rows in

audi. This will create 4 \cdot 8

= 32 SUV rows in combo.temp each match the 1 sedan row in

audi. This will create 3 \cdot 1

= 3 sedan rows in combo.Thus, the total number of rows in combo is 32 + 3 = 35.

Note: You may notice that 35 is the result of multiplying the

"SUV" and "Sedan" columns in the DataFrame

provided, and adding up the results. This problem is similar to Problem 5 from the Fall 2021

Midterm).

The average score on this problem was 45%.

Below, we provide the same DataFrame as shown at the start of the previous problem, which contains the distribution of “BodyStyle” for all “Brands” in evs, other than Nissan.

Suppose we’ve run the following few lines of code.

tesla = evs[evs.get("Brand") == "Tesla"]

bmw = evs[evs.get("Brand") == "BMW"]

audi = evs[evs.get("Brand") == "Audi"]

combo = tesla.merge(bmw, on="BodyStyle").merge(audi, on="BodyStyle")How many rows does the DataFrame combo have?

21

24

35

65

72

96

Answer: 35

Let’s attempt this problem step-by-step. We’ll first determine the

number of rows in tesla.merge(bmw, on="BodyStyle"), and

then determine the number of rows in combo. For the

purposes of the solution, let’s use temp to refer to the

first merged DataFrame,

tesla.merge(bmw, on="BodyStyle").

Recall, when we merge two DataFrames, the resulting

DataFrame contains a single row for every match between the two columns,

and rows in either DataFrame without a match disappear. In this problem,

the column that we’re looking for matches in is

"BodyStyle".

To determine the number of rows of temp, we need to

determine which rows of tesla have a

"BodyStyle" that matches a row in bmw. From

the DataFrame provided, we can see that the only

"BodyStyle"s in both tesla and

bmw are SUV and sedan. When we merge tesla and

bmw on "BodyStyle":

tesla each match the 1 SUV row in

bmw. This will create 4 SUV rows in temp.tesla each match the 1 sedan row in

bmw. This will create 3 sedan rows in

temp.So, temp is a DataFrame with a total of 7 rows, with 4

rows for SUVs and 3 rows for sedans (in the "BodyStyle")

column. Now, when we merge temp and audi on

"BodyStyle":

temp each match the 8 SUV rows in

audi. This will create 4 \cdot 8

= 32 SUV rows in combo.temp each match the 1 sedan row in

audi. This will create 3 \cdot 1

= 3 sedan rows in combo.Thus, the total number of rows in combo is 32 + 3 = 35.

Note: You may notice that 35 is the result of multiplying the

"SUV" and "Sedan" columns in the DataFrame

provided, and adding up the results. This problem is similar to Problem 5 from the Fall 2021

Midterm).

The average score on this problem was 45%.

Consider the DataFrame combo, defined below.

combo = txn.groupby(["is_fraud", "method", "card"]).mean()What is the maximum possible value of combo.shape[0]?

Give your answer as an integer.

Answer: 16

combo.shape[0] will give us the number of rows of the

combo DataFrame. Since we’re grouping by

"is_fraud", "method", and "card",

we will have one row for each unique combination of values in these

columns. There are 2 possible values for "is_fraud", 2

possible values for "method", and 2 possible values for

"card", so the total number of possibilities is 2 * 2 * 4 =

16. This is the maximum number possible because 16 combinations of

"is_fraud", "method", and "card"

are possible, but they may not all exist in the data.

The average score on this problem was 75%.

What is the value of combo.shape[1]?

1

2

3

4

5

6

Answer: 2

combo.shape[1] will give us the number of columns of the

DataFrame. In this case, we’re using .mean() as our

aggregation function, so the resulting DataFrame will only have columns

with numeric types (since BabyPandas automatically ignores columns which

have a data type incompatible with the aggregation function). In this

case, "amount" and "lifetime" are the only

numeric columns, so combo will have 2 columns.

The average score on this problem was 47%.

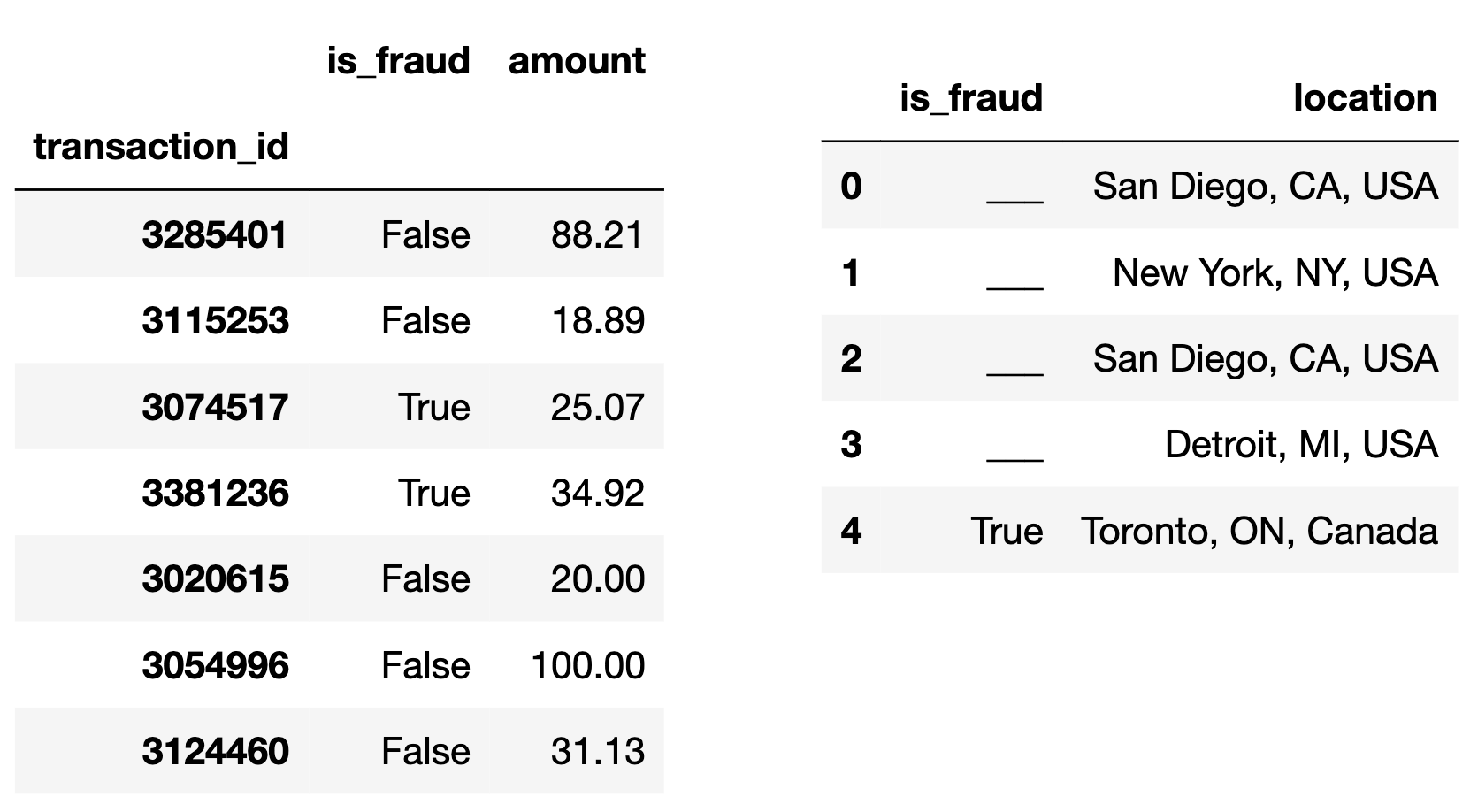

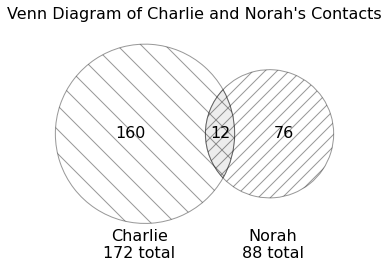

The DataFrame seven, shown below to the

left, consists of a simple random sample of 7 rows from

txn, with just the "is_fraud" and

"amount" columns selected.

The DataFrame locations, shown below to the

right, is missing some values in its

"is_fraud" column.

Fill in the blanks to complete the "is_fraud" column of

locations so that the DataFrame

seven.merge(locations, on="is_fraud") has

19 rows.

Answer A correct answer has one True

and three False rows.

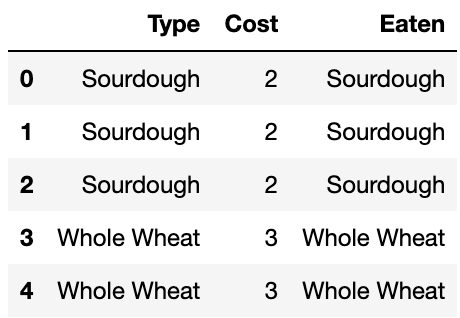

We’re merging on the "is_fraud" column, so we want to

look at which rows have which values for "is_fraud". There

are only two possible values (True and False),

and we see that there are two Trues and 5

Falses in seven. Now, think about what happens

“under the hood” for this merge, and how many rows are created when it

occurs. Python will match each True in seven

with each True in the "is_fraud" column of

location, and make a new row for each such pair. For

example, since Toronto’s row in location has a

True value in location, the merged DataFrame

will have one row where Toronto is matched with the transaction of

$34.92 and one where Toronto is matched with the transaction of $25.07.

More broadly, each True in locations creates 2

rows in the merged DataFrame, and each False in

locations creates 5 rows in the merged DataFrame. The

question now boils down to creating 19 by summing 2s and 5s. Notice that

19 = 3\cdot5+2\cdot2. This means we can

achieve the desired 19 rows by making sure the locations

DataFrame has three False rows and two True

rows. Since location already has one True, we

can fill in the remaining spots with three Falses and one

True. It doesn’t matter which rows we make

True and which ones we make False, since

either way the merge will produce the same number of rows for each (5

each for every False and 2 each for every

True).

The average score on this problem was 88%.

True or False: It is possible to fill in the four blanks in the

"is_fraud" column of locations so that the

DataFrame seven.merge(locations, on="is_fraud") has

14 rows.

True

False

Answer: False

As we discovered by solving problem 5.1, each False

value in locations gives rise to 5 rows of the merged

DataFrame, and each True value gives rise to 2 rows. This

means that the number of rows in the merged DataFrame will be m\cdot5 + n\cdot2, where m is the number of

Falses in location and n is the number of

Trues in location. Namely, m and n are

integers that add up to 5. There’s only a few possibilities so we can

try them all, and see that none add up 14:

0\cdot5 + 5\cdot2 = 10

1\cdot5 + 4\cdot2 = 13

2\cdot5 + 3\cdot2 = 16

3\cdot5 + 2\cdot2 = 19

4\cdot5 + 1\cdot2 = 22

The average score on this problem was 79%.

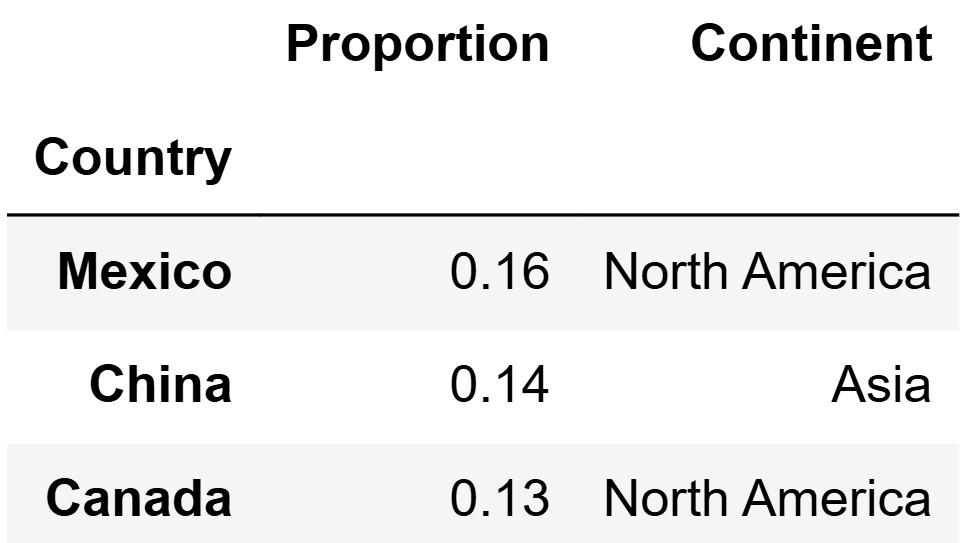

For those who plan on having children, an important consideration

when deciding whether to live in an area is the cost of raising children

in that area. The DataFrame expensive, defined below,

contains all of the rows in living_cost where the

"avg_childcare_cost" is at least $20,000.

expensive = living_cost[living_cost.get("avg_childcare_cost")

>= 20000]We’ll call a county an “expensive county" if there is at

least one "family_type" in that county with an

"avg_childcare_cost" of at least $20,000. Note that all

expensive counties appear in the expensive DataFrame, but

some may appear multiple times (if they have multiple

"family_type"s with an "avg_childcare_cost" of

at least $20,000).

Recall that the "is_metro" column contains Boolean

values indicating whether or not each county is part of a metropolitan

(urban) area. For all rows of living_cost (and, hence,

expensive) corresponding to the same geographic location,

the value of "is_metro" is the same. For instance, every

row corresponding to San Diego County has an "is_metro"

value of True.

Fill in the blanks below so that the result is a DataFrame indexed by

"state" where the "is_metro" column gives the

proportion of expensive counties in each state that are part of

a metropolitan area. For example, if New Jersey has five

expensive counties and four of them are metropolitan, the row

corresponding to a New Jersey should have a value of 0.8 in the

"is_metro" column.

(expensive.groupby(____(a)____).max()

.reset_index()

.groupby(____(b)____).____(c)____)What goes in blank (a)?

Answer: ["state", "county"] or

["county", "state"]

We are told that all expensive counties appear in the

expensive DataFrame, but some may appear multiple times,

for several different "family_type" values. The question we

want to answer, however, is about the proportion of expensive counties

in each state that are part of a metropolitan area, which has nothing to

do with "family_type". In other words, we don’t want or

need multiple rows corresponding to the same US county.

To keep just one row for each US county, we can group by both

"state" and "county" (in either order). Then

the resulting DataFrame will have one row for each unique combination of

"state" and "county", or one row for each US

county. Notice that the .max() aggregation method keeps the

last alphabetical value from the "is_metro" column in each

US county. If there are multiple rows in expensive

corresponding to the same US county, we are told that they will all have

the same value in the "is_metro" column, so taking the

maximum just takes any one of these values, which are all the same. We

could have just as easily taken the minimum.

Notice the presence of .reset_index() in the provided

code. That is a clue that we may need to group by multiple columns in

this problem!

The average score on this problem was 14%.

What goes in blank (b)?

Answer: "state"

Now that we have one row for each US county that is considered

expensive, we want to proceed by calculating the proportion of expensive

counties within each state that are in a metropolitan area. Our goal is

to organize the counties by state and create a DataFrame indexed only by

"state" so we want to group by "state" to

achieve this.

The average score on this problem was 68%.

What goes in blank (c)?

Answer: mean()

Recall that the "is_metro" column consists of Boolean

values, where True equals 1 and False equals

0. Notice that if we take the average of the "is_metro"

column for all the counties in a given state, we’ll be computing the sum

of these 0s and 1s (or the number of True values) divided

by the total number of expensive counties in that state. This gives the

proportion of expensive counties in the state that are in a metropolitan

area. Thus, when we group the expensive counties according to what state

they are in, we can use the .mean() aggregation method to

calculate the proportion of expensive counties in each state that are in

a metropolitan area.

The average score on this problem was 35%.

Recall that living_cost has 31430 rows, one for each of the ten possible

"family_type" values in each of the 3143 US counties.

Consider the function state_merge, defined below.

def state_merge(A, B):

state_A = living_cost[living_cost.get("state") == A]

state_B = living_cost[living_cost.get("state") == B]

return state_A.merge(state_B, on="family_type").shape[0]Suppose Montana ("MT") has 5 counties, and suppose

state_merge("MT", "NV") evaluates to 1050. How

many counties does Nevada ("NV") have? Give your answer as

an integer.

Answer 21

We are told Montana has 5 counties. We don’t know how many counties

Nevada has, but let’s call the number of counties in Nevada x and see how many rows the merged DataFrame

should have, in terms of x. If Montana

has 5 counties, since there are 10 "family_type" values per

county, this means the state_A DataFrame has 50 rows.

Similarly, if Nevada has x counties,

then state_B has 10x rows.

When we merge on "family_type", each of the 5 rows in

state_A with a given "family_type" (say

"2a3c") will match with each of the x rows in state_B with that same

"family_type". This will lead to 5x rows in the output corresponding to each

"family_type", and since there are 10 different values for

"family_type", this means the final output will have 50x rows.

We are told that the merged DataFrame has 1050 rows, so we can find x by solving 50x = 1050, which leads to x = 21.

The average score on this problem was 36%.

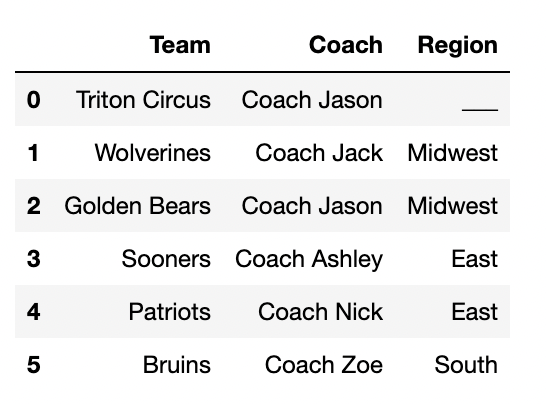

Select the correct way to fill in the blank such that the code below

evaluates to True.

treat.groupby(______).mean().shape[0] == treat.shape[0] "address"

"candy"

"neighborhood"

["address", "candy"]

["candy", "neighborhood"]

["address", "neighborhood"]

Answer: ["address", "candy"]

.shape returns a tuple containing the number of rows and

number of columns of a DataFrame respectively. By indexing

.shape[0] we get the number of rows. In the above question,

we are comparing whether the number of rows of treat

grouped by its column(s) is equal to the number of rows of the original

treat itself. This is only possible when there is a unique

row for each value in the column or for each combination of columns.

Since it is possible for an address to give out different types of

candy, values in "address" can show up multiple times.

Similarly, values in "candy" can also show up multiple

times since more than one house may give out a specific candy. A

neighborhood has multiple houses, so if a neighborhood has more than one

house, "neighborhood" will appear multiple times.

% write for combinations here % Each address gives out a specific

candy only once, and hence ["address", "candy"] would have

a unique row for each combination. This would make the number of rows in

the grouped DataFrame equal to treat itself. Multiple

neighborhoods might be giving out the same candy or a single

neighborhood could be giving out multiple candies, so

["candy", "neighborhood"] is not the answer. Finally, a

neighborhood can have multiple addresses, but each address could be

giving out more than one candy, which would mean this combination would

occur multiple times in treat, which means this would also

not be an answer. Since ["address", "candy"] is the only

combination that gives a unique row for each combination, the grouped

DataFrame would contain the same number of rows as treat

itself.

The average score on this problem was 69%.

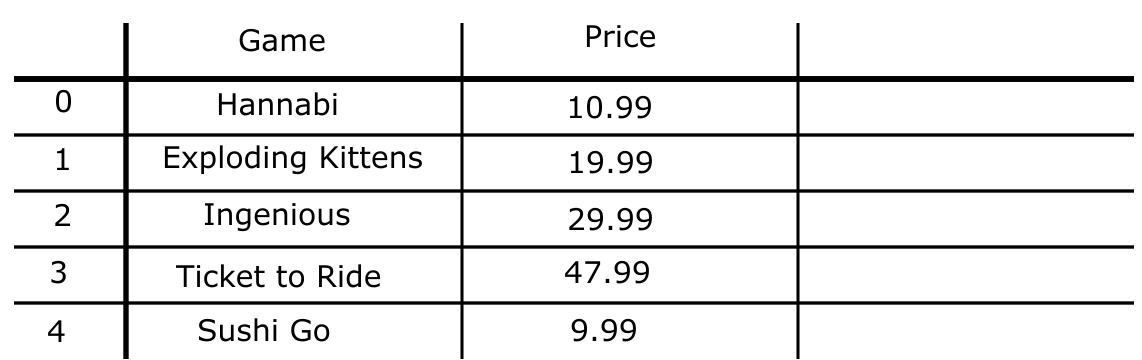

Assume that all houses in treat give out the same size

candy, say fun-sized. Suppose we have an additional DataFrame,

trick, which is indexed by "candy" and has one

column, "price", containing the cost in dollars of a

single piece of fun-sized candy, as a

float.

Suppose that:

treat has 200 rows total, and includes 15 distinct

types of candies.

trick has 25 rows total: 15 for the candies that

appear in treat, plus 10 additional rows that correspond to

candies not represented in treat.

Consider the following line of code:

trick_or_treat = trick.merge(treat, left_index = True, right_on = "candy")How many rows does trick_or_treat have?

15

25

200

215

225

3000

5000

Answer: 200

We are told that trick has 25 rows: 15 from candies that

are in treat and 10 additional candies. This means that

each candy in trick appears exactly once because 15+10= 25.

In addition, a general property when merging dataframes is that the

number of rows for one shared value between the dataframes is the

product of the number of occurences in either dataframe. For example, if

Twix occurs 5 times in treat, the number of times it occurs

in trick_or_treat is 5 * 1 = 5 (it occurs once in

trick). Using this logic, we can determine how many rows

are in trick_or_treat. Since each number of candies is

multipled by one and they sum up to 200, the number of rows will be

200.

The average score on this problem was 39%.

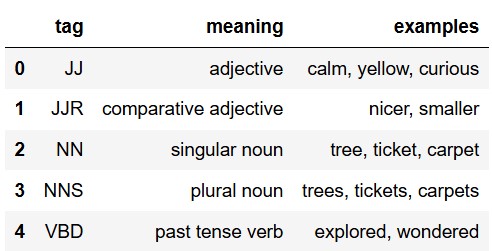

In the early 1990s, computational linguists developed the Penn

Treebank part of speech tagging system. In this system, there are 36 tags that represent different parts of

speech. The tags, their meanings, and a few examples are given in the

tags DataFrame, whose first five rows are shown here.

All of the values in the "ps" column of

words are tags from the Penn Treebank system. Remember,

we’re assuming that we’ve already applied the fix_ps

function from the previous problem.

We want to merge words with tags so that we

can see the meaning of each word’s part of speech tag more easily. Fill

in the blank in the code below to accomplish this.

Note that the index is lost when merging, so in order to keep

"word" as the index of our result, we have to use

reset_index() before merging, then set the index to

"word" again after merging.

merged = words.reset_index().merge(__(a)__).set_index("word")

merged(a): tags, left on="ps", right on="tag"

We want to merge the words dataframe with the tags dataframe, and we

want both to merge on the column with the tags in them. In the words df,

this is the "ps" column, and in the tags df this is the

"tag" column.

The average score on this problem was 75%.

Suppose words contains 5357 rows and tags contains

36 rows. How many rows does

merged contain? Give your answer as an integer or a

mathematical expression that evaluates to an integer.

Answer: 5357

When we merge words with tags, we know that

there are 36 unique rows in tags. This means that for every row in

words, there is only one row in tags that it

will be able to merge with in this new df. This means that every row in

words corresponds to only one row in , meaning that the new

merged df will have the same amount of rows as the words

df, or 5337.

The average score on this problem was 62%.

Suppose we have a function classify_difficulty that

takes as input the number of students that marked a word difficult, and

returns a difficulty rating of the word: "easy",

"moderate", or "hard". Define the DataFrame

grouped as follows. Note that this uses the

classify_length function from the previous problem.

grouped = (words.assign( diff_category =

words.get("diff").apply(classify_difficulty),

length_category =

words.get("length").apply(classify_length)

)

.groupby(["diff_category", "length_category"])

.count()

.reset_index()

)Suppose there is at least one word with every

possible pair of values for "diff_category" and

"length_category".

How many rows does grouped have? Give your answer as an

integer.

Answer: 9

The code groups the dataframe by two columns, so the number of rows

will be the number of combinations between the two rows. So the two

columns are ‘diff_category’: ("easy", "moderate", "hard") and

‘length_category’: ("short", "medium", "long"), and 3 columns x 3

columns = 9 columns.

The average score on this problem was 77%.

Fill in the blank to set num to the number of words

whose "diff_category" is "hard" and whose

"length_category" is "short". Recall that

.groupby arranges the rows of its output in alphabetical

order.

num = grouped.get("ps").iloc[__(a)__](a): 5

As stated in the last answer, the options for the two indicies are ’diff category’: (”easy”, ”moderate”, ”hard”) and ’length category’: (”short”, ”medium”, ”long”), with ’diff category’ coming first then ’length category’. We want to find the row that is “hard” and ”short”. If we sort each index alphabetically, we get (”easy”, ”hard”, ”moderate”) and (”medium”, ”long”, ”short”). Then, the dataframe looks like ”easy” difficulty for indices 0-2, ”hard” dif- ficulty for 3-5, and medium for 6-8, with each difficulty’s first row being ”medium”, second being ”long”, and last being ”short”. Since we want the hard, short word, we go hard → indices 3-5, then hard and short → index 5.

The average score on this problem was 52%.

We want to determine the proportion of "short" words

that are "hard". Fill in the blanks in the code below such

that prop evaluates to this proportion.

denom = grouped[__(b)__].get("freq").__(c)__

prop = num / denom(b):

grouped.get("length category") == short

We want the proportion of short words that are hard. Remember,

proportions are usually a part of the data divided by the whole sample

space. We already have the row that is the part of the data, which we

did in part b. Therefore, the denom should be the whole sample space,

which in this case is the total amount of short words. So, when we

query, we want only the short words, which we get by querying with

grouped.get("length category") == short.

(c): .sum()

Once we get the freq column, we’re left with one row

indexed by "short" with three subentries for each

difficulty, so we need to use.sum()

The average score on this problem was 38%.

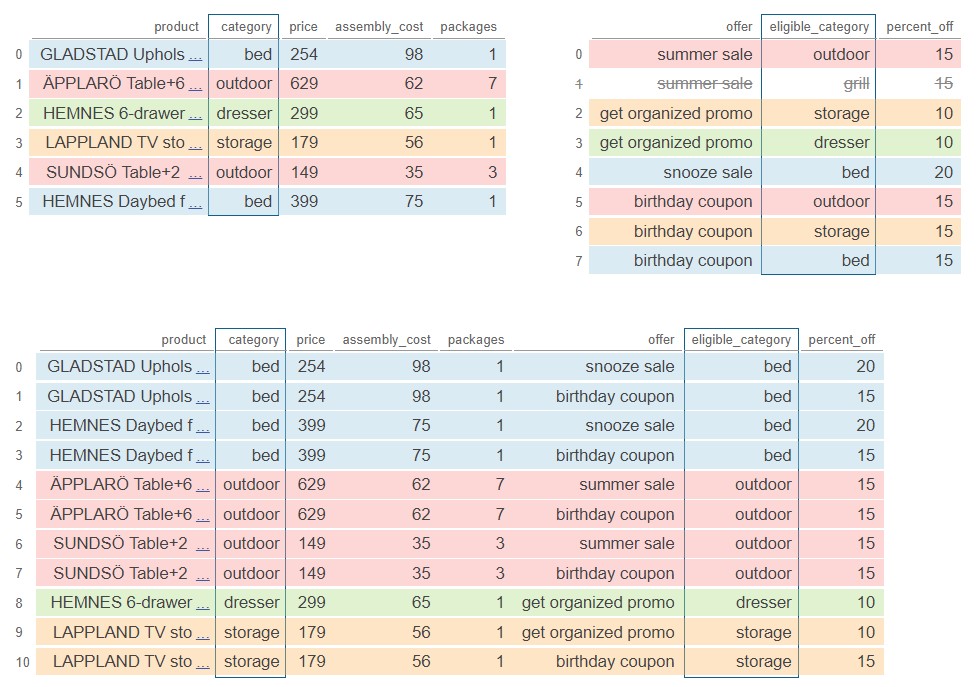

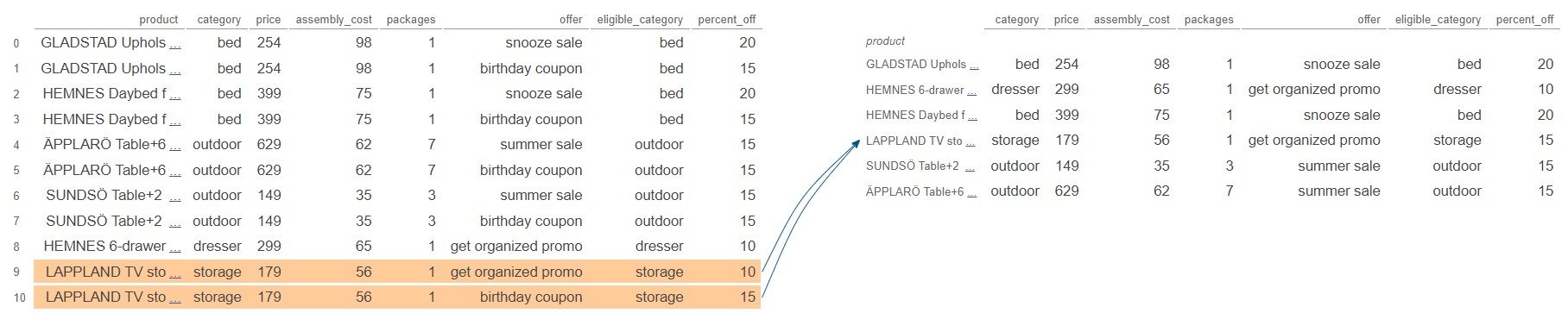

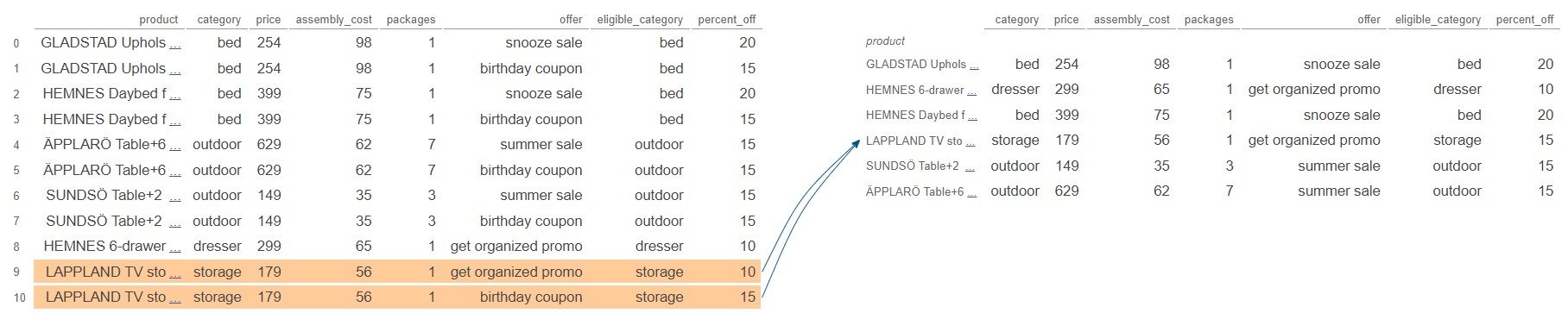

Recall that we have the complete set of currently available discounts

in the DataFrame offers.

The DataFrame with_offers is created as follows.

(with_offers = ikea.take(np.arange(6))

.merge(offers, left_on='category',

right_on='eligible_category'))How many rows does with_offers have?

Answer: 11

First, consider the DataFrame ikea.take(np.arange(6)),

which contains the first six rows of ikea. We know the

contents of these first six rows from the preview of the DataFrame at

the start of this exam. To merge with offers, we need to

look at the 'category' of each of these six rows and see

how many rows of offers have the same value in the

'eligible_category' column.

The first row of ikea.take(np.arange(6)) is a

'bed'. There are two 'bed' offers, so that

will create two rows in the output DataFrame. Similarly, the second row

of ikea.take(np.arange(6)) creates two rows in the output

DataFrame because there are two 'outdoor' offers. The third

row creates just one row in the output, since there is only one

'dresser' offer. Continuing row-by-row in this way, we can

sum the number of rows created to get: 2+2+1+2+2+2 = 11.

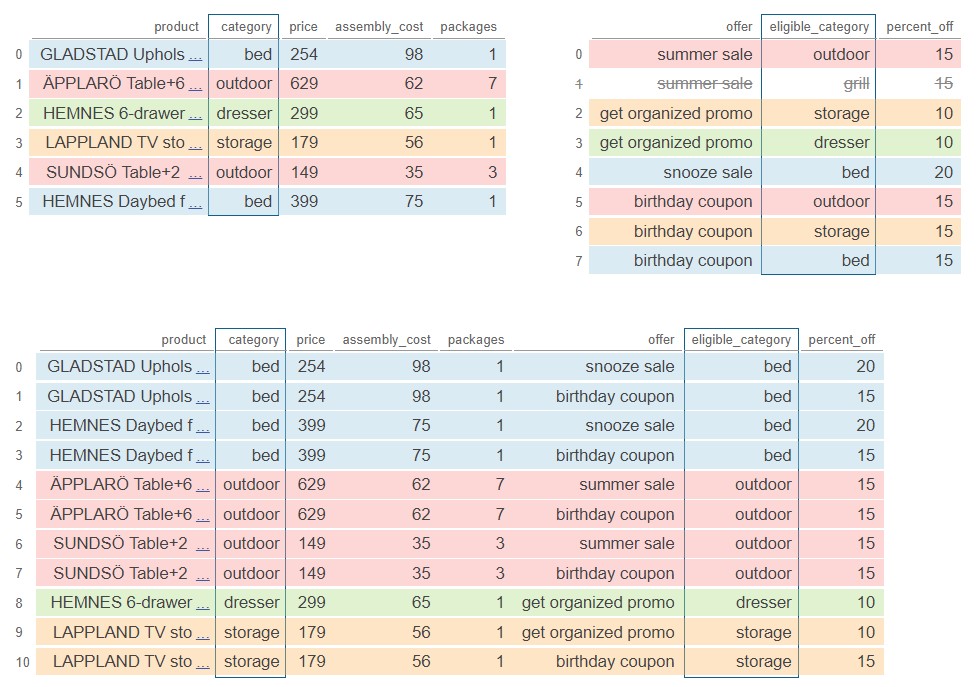

Pandas Tutor is a really helpful tool to visualize the merge process. Below is a color-coded visualization of this merge, generated by the code here.

The average score on this problem was 41%.

How many rows of with_offers have a value of 20 in the

'percent_off' column?

Answer: 2

There is just one offer with a value of 20 in the

'percent_off' column, and this corresponds to an offer on a

'bed'. Since there are two rows of

ikea.take(np.arange(6)) with a 'category' of

'bed', each of these will match with the 20 percent-off

offer, creating two rows of with_offers with a value of 20

in the 'percent_off' column.

The

visualization

from Pandas Tutor below confirms our answer. The two rows with a

value of 20 in the 'percent_off' column are both shown in

rows 0 and 2 of the output DataFrame.

The average score on this problem was 70%.

If you can use just one offer per product, you’d want to use the one that saves you the most money, which we’ll call the best offer.

True or False: The expression below evaluates to a

Series indexed by 'product' with the name of the best offer

for each product that appears in the with_offers

DataFrame.

with_offers.groupby('product').max().get('offer')True

False

Answer: False

Recall that groupby applies the aggregation function

separately to each column. Applying the .max() aggregate on

the 'offer' column for each group gives the name that is

latest in alphabetical order because it contains strings, whereas

applying the .max() aggregate on the

'percent_off' column gives the largest numerical value.

These don’t necessarily go together in with_offers.

In particular, the element of

with_offers.groupby('product').max().get('offer')

corresponding to the LAPPLAND TV storage unit will be

'get_organized_promo'. This happens because the two rows of

with_offers corresponding to the LAPPLAND TV storage unit

have values of 'get_organized_promo' and

'birthday coupon', but 'get_organized_promo'

is alphabetically later, so it’s considered the max by

.groupby. However, the 'birthday coupon' is

actually a better offer, since it’s 15 percent off, while the

'get_organized_promo' is only 10 percent off. The

expression does not actually find the best offer for each product, but

instead finds the latest alphabetical offer for each product.

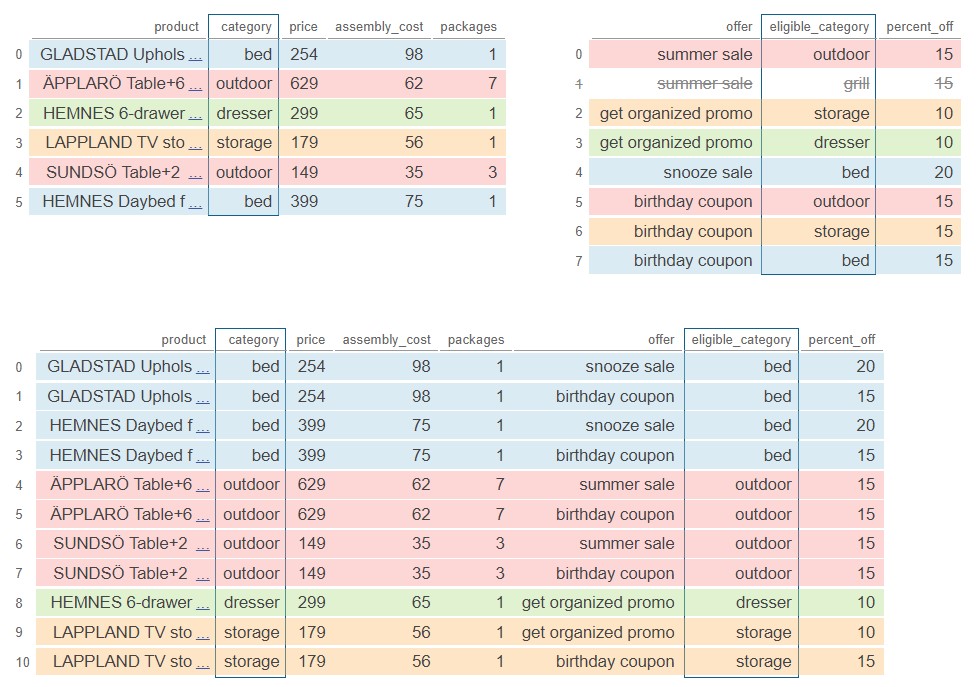

We can see this directly by looking at the output of Pandas Tutor below, generated by this code.

The average score on this problem was 69%.

You want to add a column to with_offers containing the

price after the offer discount is applied.

with_offers = with_offers.assign(after_price = _________)

with_offersWhich of the following could go in the blank? Select all that apply.

with_offers.get('price') - with_offers.get('percent_off')/100

with_offers.get('price')*(100 - with_offers.get('percent_off'))/100

with_offers.get('price') - with_offers.get('price')*with_offers.get('percent_off')/100

with_offers.get('price')*(100 - with_offers.get('percent_off')/100)

Answer:

with_offers.get('price')*(100 - with_offers.get('percent_off'))/100,

(with_offers.get('price') - with_offers.get('price')*with_offers.get('percent_off')/100)

Notice that all the answer choices use

with_offers.get('price'), which is a Series of prices, and

with_offers.get('percent_off'), which is a Series of

associated percentages off. Using Series arithmetic, which works

element-wise, the goal is to create a Series of prices after the

discount is applied.

For example, the first row of with_offers corresponds to

an item with a price of 254 dollars, and a discount of 20 percent off,

coming from the snooze sale. This means its price after the discount

should be 80 percent of the original value, which is 254*0.8 = 203.2 dollars.

Let’s go through each answer in order, working with this example.

The first answer choice takes the price and subtracts the percent off divided by 100. For our example, this would compute the discounted price as 254 - 20/100 = 253.8 dollars, which is incorrect.

The second answer choice multiplies the price by the quantity 100 minus the percent off, then divides by 100. This works because when we subtract the percent off from 100 and divide by 100, the result represents the proportion or fraction of the cost we must pay, and so multiplying by the price gives the price after the discount. For our example, this comes out to 254*(100-20)/100= 203.2 dollars, which is correct.

The third answer choice is also correct. This corresponds to taking the original price and subtracting from it the dollar amount off, which comes from multiplying the price by the percent off and dividing by 100. For our example, this would be computed as 254 - 254*20/100 = 203.2 dollars, which is correct.

The fourth answer multiplies the price by the quantity 100 minus the percent off divided by 100. For our example, this would compute the discounted price as 254*(100 - 20/100) = 25349.2 dollars, a number that’s nearly one hundred times the original price!

Therefore, only the second and third answer choices are correct.

The average score on this problem was 79%.

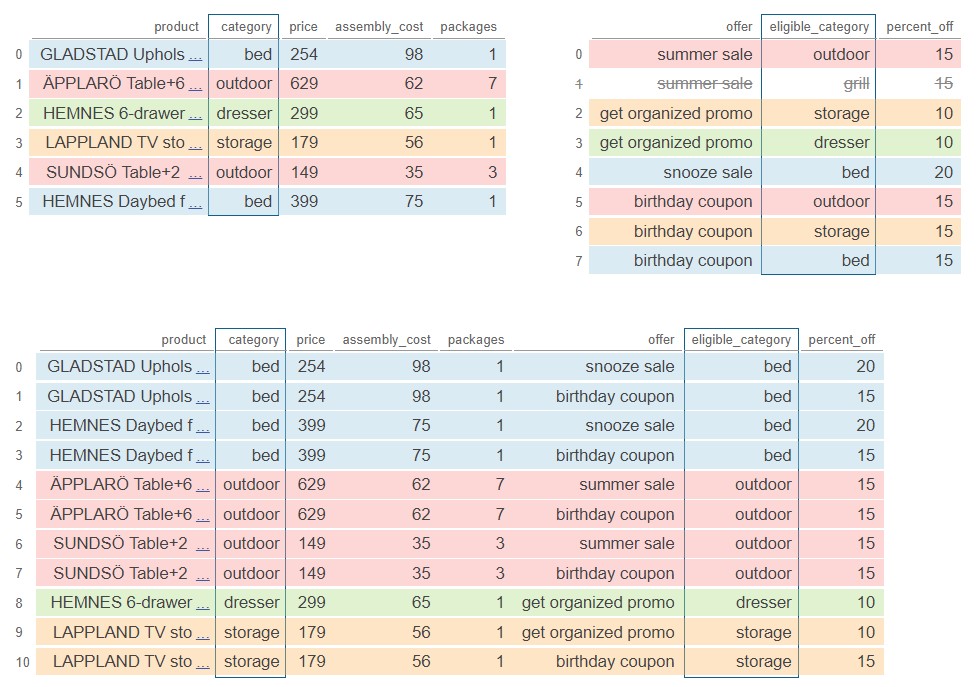

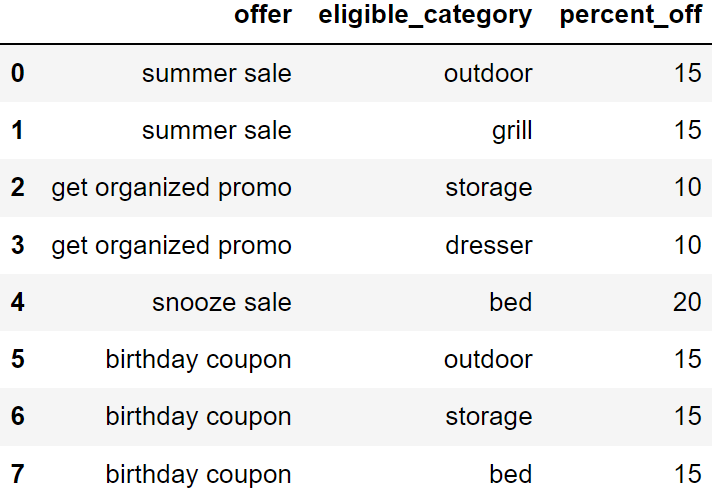

For each offer currently available, the DataFrame offers

has a separate row for each category of products to which the offer can

be applied. The columns are:

'offer' (str): the name of the offer'category' (str): the category to which

the offer applies'percent_off' (int): the percent discount

when applying this offer to this categoryThe full offers DataFrame is shown below. All rows are

pictured.

The DataFrame with_offers is created as follows.

(with_offers = ikea.take(np.arange(6))

.merge(offers, left_on='category',

right_on='eligible_category'))How many rows does with_offers have?

Answer: 11

First, consider the DataFrame ikea.take(np.arange(6)),

which contains the first six rows of ikea. We know the

contents of these first six rows from the preview of the DataFrame at

the start of this exam. To merge with offers, we need to

look at the 'category' of each of these six rows and see

how many rows of offers have the same value in the

'eligible_category' column.

The first row of ikea.take(np.arange(6)) is a

'bed'. There are two 'bed' offers, so that

will create two rows in the output DataFrame. Similarly, the second row

of ikea.take(np.arange(6)) creates two rows in the output

DataFrame because there are two 'outdoor' offers. The third

row creates just one row in the output, since there is only one

'dresser' offer. Continuing row-by-row in this way, we can

sum the number of rows created to get: 2+2+1+2+2+2 = 11.

Pandas Tutor is a really helpful tool to visualize the merge process. Below is a color-coded visualization of this merge, generated by the code here.

The average score on this problem was 41%.

How many rows of with_offers have a value of 20 in the

'percent_off' column?

Answer: 2

There is just one offer with a value of 20 in the

'percent_off' column, and this corresponds to an offer on a

'bed'. Since there are two rows of

ikea.take(np.arange(6)) with a 'category' of

'bed', each of these will match with the 20 percent-off

offer, creating two rows of with_offers with a value of 20

in the 'percent_off' column.

The

visualization

from Pandas Tutor below confirms our answer. The two rows with a

value of 20 in the 'percent_off' column are both shown in

rows 0 and 2 of the output DataFrame.

The average score on this problem was 70%.

If you can use just one offer per product, you’d want to use the one that saves you the most money, which we’ll call the best offer.

True or False: The expression below evaluates to a

Series indexed by 'product' with the name of the best offer

for each product that appears in the with_offers

DataFrame.

with_offers.groupby('product').max().get('offer')True

False

Answer: False

Recall that groupby applies the aggregation function

separately to each column. Applying the .max() aggregate on

the 'offer' column for each group gives the name that is

latest in alphabetical order because it contains strings, whereas

applying the .max() aggregate on the

'percent_off' column gives the largest numerical value.

These don’t necessarily go together in with_offers.

In particular, the element of

with_offers.groupby('product').max().get('offer')

corresponding to the LAPPLAND TV storage unit will be

'get_organized_promo'. This happens because the two rows of

with_offers corresponding to the LAPPLAND TV storage unit

have values of 'get_organized_promo' and

'birthday coupon', but 'get_organized_promo'

is alphabetically later, so it’s considered the max by

.groupby. However, the 'birthday coupon' is

actually a better offer, since it’s 15 percent off, while the

'get_organized_promo' is only 10 percent off. The

expression does not actually find the best offer for each product, but

instead finds the latest alphabetical offer for each product.

We can see this directly by looking at the output of Pandas Tutor below, generated by this code.

The average score on this problem was 69%.

You want to add a column to with_offers containing the

price after the offer discount is applied.

with_offers = with_offers.assign(after_price = _________)

with_offersWhich of the following could go in the blank? Select all that apply.

with_offers.get('price') - with_offers.get('percent_off')/100

with_offers.get('price')*(100 - with_offers.get('percent_off'))/100

with_offers.get('price') - with_offers.get('price')*with_offers.get('percent_off')/100

with_offers.get('price')*(100 - with_offers.get('percent_off')/100)

Answer:

with_offers.get('price')*(100 - with_offers.get('percent_off'))/100,

(with_offers.get('price') - with_offers.get('price')*with_offers.get('percent_off')/100)

Notice that all the answer choices use

with_offers.get('price'), which is a Series of prices, and

with_offers.get('percent_off'), which is a Series of

associated percentages off. Using Series arithmetic, which works

element-wise, the goal is to create a Series of prices after the

discount is applied.

For example, the first row of with_offers corresponds to

an item with a price of 254 dollars, and a discount of 20 percent off,

coming from the snooze sale. This means its price after the discount

should be 80 percent of the original value, which is 254*0.8 = 203.2 dollars.

Let’s go through each answer in order, working with this example.

The first answer choice takes the price and subtracts the percent off divided by 100. For our example, this would compute the discounted price as 254 - 20/100 = 253.8 dollars, which is incorrect.

The second answer choice multiplies the price by the quantity 100 minus the percent off, then divides by 100. This works because when we subtract the percent off from 100 and divide by 100, the result represents the proportion or fraction of the cost we must pay, and so multiplying by the price gives the price after the discount. For our example, this comes out to 254*(100-20)/100= 203.2 dollars, which is correct.

The third answer choice is also correct. This corresponds to taking the original price and subtracting from it the dollar amount off, which comes from multiplying the price by the percent off and dividing by 100. For our example, this would be computed as 254 - 254*20/100 = 203.2 dollars, which is correct.

The fourth answer multiplies the price by the quantity 100 minus the percent off divided by 100. For our example, this would compute the discounted price as 254*(100 - 20/100) = 25349.2 dollars, a number that’s nearly one hundred times the original price!

Therefore, only the second and third answer choices are correct.

The average score on this problem was 79%.

Fill in the blank in the code below so that

chronological is a DataFrame with the same rows as

sungod, but ordered chronologically by appearance on stage.

That is, earlier years should come before later years, and within a

single year, artists should appear in the DataFrame in the order they

appeared on stage at Sun God. Note that groupby

automatically sorts the index in ascending order.

chronological = sungod.groupby(___________).max().reset_index() ['Year', 'Artist', 'Appearance_Order']

['Year', 'Appearance_Order']

['Appearance_Order', 'Year']

None of the above.

Answer:

['Year', 'Appearance_Order']

The fact that groupby automatically sorts the index in

ascending order is important here. Since we want earlier years before

later years, we could group by 'Year', however if we

just group by year, all the artists who performed in a given

year will be aggregated together, which is not what we want. Within each

year, we want to organize the artists in ascending order of

'Appearance_Order'. In other words, we need to group by

'Year' with 'Appearance_Order' as subgroups.

Therefore, the correct way to reorder the rows of sungod as

desired is

sungod.groupby(['Year', 'Appearance_Order']).max().reset_index().

Note that we need to reset the index so that the resulting DataFrame has

'Year' and 'Appearance_Order' as columns, like

in sungod.

The average score on this problem was 85%.

Another DataFrame called music contains a row for every

music artist that has ever released a song. The columns are:

'Name' (str): the name of the music

artist'Genre' (str): the primary genre of the

artist'Top_Hit' (str): the most popular song by

that artist, based on sales, radio play, and streaming'Top_Hit_Year' (int): the year in which

the top hit song was releasedYou want to know how many musical genres have been represented at Sun

God since its inception in 1983. Which of the following expressions

produces a DataFrame called merged that could help

determine the answer?

merged = sungod.merge(music, left_on='Year', right_on='Top_Hit_Year')

merged = music.merge(sungod, left_on='Year', right_on='Top_Hit_Year')

merged = sungod.merge(music, left_on='Artist', right_on='Name')

merged = music.merge(sungod, left_on='Artist', right_on='Name')

Answer:

merged = sungod.merge(music, left_on='Artist', right_on='Name')

The question we want to answer is about Sun God music artists’

genres. In order to answer, we’ll need a DataFrame consisting of rows of

artists that have performed at Sun God since its inception in 1983. If

we merge the sungod DataFrame with the music

DataFrame based on the artist’s name, we’ll end up with a DataFrame

containing one row for each artist that has ever performed at Sun God.

Since the column containing artists’ names is called

'Artist' in sungod and 'Name' in

music, the correct syntax for this merge is

merged = sungod.merge(music, left_on='Artist', right_on='Name').

Note that we could also interchange the left DataFrame with the right

DataFrame, as swapping the roles of the two DataFrames in a merge only

changes the ordering of rows and columns in the output, not the data

itself. This can be written in code as

merged = music.merge(sungod, left_on='Name', right_on='Artist'),

but this is not one of the answer choices.

The average score on this problem was 86%.

In the next few parts, consider the following answer choices.

The name of the country with the most cities.

The name of the country with the fewest cities.

The number of cities in the country with the most cities.

The number of cities in the country with the fewest cities.

The last city, alphabetically, in the first country, alphabetically.

The first city, alphabetically, in the first country, alphabetically.

Nothing, because it errors.

What does the following expression evaluate to?

sun.groupby("Country").max().get("City").iloc[0]A

B

C

D

E

F

G

Answer: E. The last city, alphabetically, in the

first country, alphabetically.

Let’s break down the code:

sun.groupby("Country").max(): This line of code

groups the sun DataFrame by the "Country"

column and then determines the maximum for every other

column within each country group. Since the values in the

"City" column are stored as strings, and the maximum of a

Series of strings is the last string alphabetically, the values in the

"City" column of this DataFrame will contain the last city,

alphabetically, of each country.

.get("City"): .get("City") accesses the

"City" column.

.iloc[0]: Finally, .iloc[0] selects the

"City" value from the first row. The first row corresponds

to the first country alphabetically because groupby sorted

the DataFrame by "Country" in ascending order. The value in

the "City" column that .iloc[0] selects, then,

is the name of the last city, alphabetically, in the first country,

alphabetically.

The average score on this problem was 36%.

What does the following expression evaluate to?

sun.groupby("Country").sum().get("City").iloc[0]A

B

C

D

E

F

G

Answer: G. Nothing, because it errors.

Let’s break down the code:

sun.groupby("Country").sum(): This groups the

sun DataFrame by the "Country" column and

computes the sum for each numeric column within each country group.

Since "City" is non-numeric, it will be dropped.

.get("City"): This operation attempts to retrieve

the "City" column from the resulting DataFrame. However,

since the "City" column was dropped in the previous step,

this will raise a KeyError, indicating that the column is not present in

the DataFrame.

The average score on this problem was 73%.

What does the following expression evaluate to?

sun.groupby("Country").count().sort_values("Jan").index[-1]A

B

C

D

E

F

G

Answer: A. The name of the country with the most

cities.

Let’s break down the code:

sun.groupby("Country").count(): This groups the sun

DataFrame by the "Country" column. The

.count() method then returns the number of rows in each

group for each column. Since we’re grouping by "Country",

and since the rows in sun correspond to cities, this is

counting the number of cities in each country.

.sort_values("Jan"): The result of the previous

operation is a DataFrame with "Country" as the index and

the number of cities per country stored in every other column. The

"City, "Jan", "Feb",

"Mar", etc. columns in the resulting DataFrame all contain

the same information. Sorting by "Jan" sorts the DataFrame

by the number of cities each country has in ascending order.

.index[-1]: This retrieves the last index value from

the sorted DataFrame, which corresponds to the name of the country with

the most cities.

The average score on this problem was 61%.

What does the following expression evaluate to?

sun.groupby("Country").count().sort_values("City").get("City").iloc[-1]A

B

C

D

E

F

G

Answer: C. The number of cities in the country with

the most cities.

Let’s break down the code:

sun.groupby("Country").count(): This groups the sun

DataFrame by the "Country" column. The

.count() method then returns the number of rows in each

group for each column. Since we’re grouping by "Country",

and since the rows in sun correspond to cities, this is

counting the number of cities in each country.

.sort_values("City"): The result of the previous

operation is a DataFrame with "Country" as the index and

the number of "City"s per "Country" stored in

every other column. The "City, "Jan",

"Feb", "Mar", etc. columns in the resulting

DataFrame all contain the same information. Sorting by

"City" sorts the DataFrame by the number of cities each

country has in ascending order.

.get("City"): This retrieves the "City"

column from the sorted DataFrame, which contains the number of cities in

each country.

.iloc[-1]: This gets the last value from the

"City" column, which corresponds to the number of cities in

the country with the most cities.

The average score on this problem was 57%.

Vanessa is a big Formula 1 racing fan, and wants to plan a trip to Monaco, where the Monaco Grand Prix is held. Monaco is an example of a “city-state” — that is, a city that is its own country. Singapore is another example of a city-state.

We’ll say that a row of sun corresponds to a city-state

if its "Country" and "City" values are

equal.

Fill in the blanks so that the expression below is equal to the total

number of sunshine hours in October of all city-states in

sun.

sun[__(a)__].__(b)__What goes in blanks (a) and (b)?

Answer: (a):

sun.get("Country") == sun.get("City"), (b):

.get("Oct").sum()

What goes in blank (a)?

sun.get("Country") == sun.get("City")

This expression compares the "Country" column to the

"City" column for each row in the sun

DataFrame. It returns a Boolean Series where each value is

True if the corresponding "Country" and

"City" are the same (indicating a city-state) and

False otherwise.

The average score on this problem was 79%.

What goes in blank (b)?

.get("Oct").sum()

Here, we select the "Oct" column, which represents the

sunshine hours in October, and compute the sum of its values. By using

this after querying for city-states, we calculate the total sunshine

hours in October across all city-states in the sun

DataFrame.

The average score on this problem was 85%.

Complete the implementation of the function

most_sunshine, which takes in country, the

name of a country, and month, the name of a month

(e.g. "Apr"), and returns the name of the city (as a

string) in country with the most sunshine hours in

month, among the cities in sun. Assume there

are no ties.

def most_sunshine(country, month):

country_only = __(a)__

return country_only.__(b)__What goes in blanks (a) and (b)?

Answer: (a):

sun[sun.get("Country") == country], (b):

sort_values(month).get("City").iloc[-1] or

sort_values(month, ascending=False).get("City").iloc[0]

What goes in blank (a)?

sun[sun.get("Country") == country] To identify cities only

within the specified country, we need to query for the rows in the

sun DataFrame where the "Country" column

matches the given country. The expression

sun.get("Country") == country creates a Boolean Series,

where each entry is True if the corresponding row’s

"Country" column matches the provided country

and False otherwise. When this Boolean series is used to

index into sun DataFrame, it keeps only the rows for which

sun.get("Country") == country is True,

effectively giving us only the cities from the specified country.

The average score on this problem was 78%.

What goes in blank (b)?

sort_values(month).get("City").iloc[-1] or

sort_values(month, ascending=False).get("City").iloc[0]

To determine the city with the most sunshine hours in the specified

month, we sort the queried DataFrame (which only contains cities from

the specified country) based on the values in the month

column. There are two ways to achieve the desired result:

.iloc[-1] to get the last item after selecting the

"City" column with .get("City")..iloc[0] to get the

first item after selecting the "City" column with

.get("City").Both methods will give us the name of the city with the most sunshine hours in the specified month.

The average score on this problem was 52%.

In this part only, assume that all "City" names in

sun are unique.

Consider the DataFrame cities defined below.

cities = sun.groupby("City").mean().reset_index()Fill in the blanks so that the DataFrame that results from the

sequence of steps described below is identical to

cities.

“Sort sun by (c) in

(d) order (e).”

What goes in blank (c)?

"Country"

"City"

"Jan"

"Year"

What goes in blank (d)?

ascending

descending

What goes in blank (e)?

and drop the "Country" column

and drop the "Country" and "City"

columns

and reset the index

, drop the "Country" column, and reset the index

, drop the "Country" and "City" columns,

and reset the index

Nothing, leave blank (e) empty

Answer: (c): "City", (d): ascending,

(e): drop the "Country" column, and reset the index

Let’s start by understanding the code provided in the question:

The .groupby("City") method groups the data in the

sun DataFrame by unique city names. Since every city name

in the DataFrame is unique, this means that each group will consist of

just one row corresponding to that city.

After grouping by city, the .mean() method computes the

average of each column for each group. Again, as each city name is

unique, this operation doesn’t aggregate multiple rows but merely

reproduces the original values for each city. (For example, the value in

the "Jan" column for the row with the index

"Hamilton" will just be 229.8, which we see in the first

row of the preview of sun.)

Finally, .reset_index() is used to reset the DataFrame’s

index. When using .groupby, the column we group by (in this

case, "City") becomes the index. By resetting the index,

we’re making "City" a regular column again and setting the

index to 0, 1, 2, 3, …

What goes in blank (c)? "City"

When we group on "City", the index of the DataFrame is set

to "City" names, sorted in ascending alphabetical order

(this is always the behavior of groupby). Since all city

names are unique, the number of rows in

sun.groupby("City").mean() is the same as the number of

rows in sun, and so grouping on "City"

effectively sorts the DataFrame by "City" and sets the

index to "City". To replicate the order in

cities, then, we must sort sun by the

"City" column in ascending order.

The average score on this problem was 97%.

What goes in blank (d)? ascending

Addressed above.

The average score on this problem was 77%.

What goes in blank (e)? , drop the

"Country" column, and reset the index

In the provided code, after grouping by "City" and

computing the mean, we reset the index. This means the

"City" column is no longer the index but a regular column,

and the DataFrame gets a fresh integer index. To replicate this

structure, we need to reset the index in our sorted DataFrame.

Additionally, when we applied the .mean() method after

grouping, any non-numeric columns (like "Country") that we

can’t take the mean of are automatically excluded from the resulting

DataFrame. To match the structure of cities, then, we must

drop the "Country" column from our sorted DataFrame.

The average score on this problem was 46%.

True or False: In the code below, Z is guaranteed to

evaluate to True.

x = sun.groupby(["Country", "Year"]).mean().shape[0]

y = sun.groupby("Country").mean().shape[0]

z = (x >= y)True

False

Answer: True

Let’s us look at each line of code separately:

x = sun.groupby(["Country", "Year"]).mean().shape[0]:

This line groups the sun DataFrame by both

"Country" and "Year", then computes the mean.

As a result, each unique combination of "Country" and

"Year" will have its own row. For instance, if there are

three different values in the "Year" column for a

particular country, that country will appear three times in the

DataFrame sun.groupby(["Country", "Year"]).mean().

y = sun.groupby("Country").mean().shape[0]: When

grouping by "Country" alone, each unique country in the

sun DataFrame is represented by one row, independent of the

information in other columns.

z = (x >= y): This comparison checks whether the

number of rows produced by grouping by both "Country" and

"Year" (which is x) is greater than or equal

to the number of rows produced by grouping only by

"Country" (which is y).

Given our grouping logic:

If every country in the sun DataFrame has only a

single unique value in the "Year" column (e.g. if the

"Year" value for all ciites in the United States was always

3035.9, and if the "Year" value for all cities in Nigeria

was always 1845.4, etc.), then the number of rows when grouping by both

"Country" and "Year" will be equal to the

number of rows when grouping by "Country" alone. In this

scenario, x will be equal to y.

If at least one country in the sun DataFrame has at

least two different values in the "Year" column (e.g. if

there are at least two cities in the United States with different values

in the "Year" column), then there will be more rows when

grouping by both "Country" and "Year" compared

to grouping by "Country" alone. This means x

will be greater than y.

Considering the above scenarios, there’s no situation where the value

of x can be less than the value of y.

Therefore, z will always evaluate to True.

The average score on this problem was 70%.

In the next few parts, consider the following answer choices.

The name of the country with the most cities.

The name of the country with the fewest cities.

The number of cities in the country with the most cities.

The number of cities in the country with the fewest cities.

The last city, alphabetically, in the first country, alphabetically.

The first city, alphabetically, in the first country, alphabetically.

Nothing, because it errors.

What does the following expression evaluate to?

sun.groupby("Country").max().get("City").iloc[0]A

B

C

D

E

F

G

Answer: E. The last city, alphabetically, in the

first country, alphabetically.

Let’s break down the code:

sun.groupby("Country").max(): This line of code

groups the sun DataFrame by the "Country"

column and then determines the maximum for every other

column within each country group. Since the values in the

"City" column are stored as strings, and the maximum of a

Series of strings is the last string alphabetically, the values in the

"City" column of this DataFrame will contain the last city,

alphabetically, of each country.

.get("City"): .get("City") accesses the

"City" column.

.iloc[0]: Finally, .iloc[0] selects the

"City" value from the first row. The first row corresponds

to the first country alphabetically because groupby sorted

the DataFrame by "Country" in ascending order. The value in

the "City" column that .iloc[0] selects, then,

is the name of the last city, alphabetically, in the first country,

alphabetically.

The average score on this problem was 36%.

What does the following expression evaluate to?

sun.groupby("Country").sum().get("City").iloc[0]A

B

C

D

E

F

G

Answer: G. Nothing, because it errors.

Let’s break down the code:

sun.groupby("Country").sum(): This groups the

sun DataFrame by the "Country" column and

computes the sum for each numeric column within each country group.

Since "City" is non-numeric, it will be dropped.

.get("City"): This operation attempts to retrieve

the "City" column from the resulting DataFrame. However,

since the "City" column was dropped in the previous step,

this will raise a KeyError, indicating that the column is not present in

the DataFrame.

The average score on this problem was 73%.

What does the following expression evaluate to?

sun.groupby("Country").count().sort_values("Jan").index[-1]A

B

C

D

E

F

G

Answer: A. The name of the country with the most

cities.

Let’s break down the code:

sun.groupby("Country").count(): This groups the sun

DataFrame by the "Country" column. The

.count() method then returns the number of rows in each

group for each column. Since we’re grouping by "Country",

and since the rows in sun correspond to cities, this is

counting the number of cities in each country.

.sort_values("Jan"): The result of the previous

operation is a DataFrame with "Country" as the index and

the number of cities per country stored in every other column. The

"City, "Jan", "Feb",

"Mar", etc. columns in the resulting DataFrame all contain

the same information. Sorting by "Jan" sorts the DataFrame

by the number of cities each country has in ascending order.

.index[-1]: This retrieves the last index value from

the sorted DataFrame, which corresponds to the name of the country with

the most cities.

The average score on this problem was 61%.

What does the following expression evaluate to?

sun.groupby("Country").count().sort_values("City").get("City").iloc[-1]A

B

C

D

E

F

G

Answer: C. The number of cities in the country with

the most cities.

Let’s break down the code:

sun.groupby("Country").count(): This groups the sun

DataFrame by the "Country" column. The

.count() method then returns the number of rows in each

group for each column. Since we’re grouping by "Country",

and since the rows in sun correspond to cities, this is

counting the number of cities in each country.

.sort_values("City"): The result of the previous

operation is a DataFrame with "Country" as the index and

the number of "City"s per "Country" stored in

every other column. The "City, "Jan",

"Feb", "Mar", etc. columns in the resulting

DataFrame all contain the same information. Sorting by

"City" sorts the DataFrame by the number of cities each

country has in ascending order.

.get("City"): This retrieves the "City"

column from the sorted DataFrame, which contains the number of cities in

each country.

.iloc[-1]: This gets the last value from the

"City" column, which corresponds to the number of cities in

the country with the most cities.

The average score on this problem was 57%.

Vanessa is a big Formula 1 racing fan, and wants to plan a trip to Monaco, where the Monaco Grand Prix is held. Monaco is an example of a “city-state” — that is, a city that is its own country. Singapore is another example of a city-state.

We’ll say that a row of sun corresponds to a city-state

if its "Country" and "City" values are

equal.

Fill in the blanks so that the expression below is equal to the total

number of sunshine hours in October of all city-states in

sun.

sun[__(a)__].__(b)__What goes in blanks (a) and (b)?

Answer: (a):

sun.get("Country") == sun.get("City"), (b):

.get("Oct").sum()

What goes in blank (a)?

sun.get("Country") == sun.get("City")

This expression compares the "Country" column to the

"City" column for each row in the sun

DataFrame. It returns a Boolean Series where each value is

True if the corresponding "Country" and

"City" are the same (indicating a city-state) and

False otherwise.

The average score on this problem was 79%.

What goes in blank (b)?

.get("Oct").sum()

Here, we select the "Oct" column, which represents the

sunshine hours in October, and compute the sum of its values. By using

this after querying for city-states, we calculate the total sunshine

hours in October across all city-states in the sun

DataFrame.

The average score on this problem was 85%.

Fill in the blanks below so that the expression below is also equal

to the total number of sunshine hours in October of all city-states in

sun.

Note: What goes in blank (b) is the same as what goes in blank (b) above.

sun.get(["Country"]).merge(__(c)__).__(b)__What goes in blank (c)?

Answer:

sun, left_on="Country", right_on="City"

Let’s break down the code:

sun.get(["Country"]): This extracts just the

"Country" column from the sun DataFrame, as a

DataFrame. (It’s extracted as a DataFrame since we passed a list to

.get instead of a single string.)

.merge(sun, left_on="Country", right_on="City"):

Here, we’re using the .merge method to merge a version of

sun with just the "Country" column (which is

our left DataFrame) with the entire sun DataFrame

(which is our right DataFrame). The merge is done by matching

"Country"s from the left DataFrame with

"City"s from the right DataFrame. This way, rows in the

resulting DataFrame correspond to city-states, as it only contains

entries where a country’s name is the same as a city’s name.

.get("Oct").sum(): After merging, we use

.get("Oct") to retrieve the "Oct" column,

which represents the sunshine hours in October. Finally,

.sum() computes the total number of sunshine hours in

October for all the identified city-states.

The average score on this problem was 50%.

Teresa and Sophia are bored while waiting in line at Bistro and decide to start flipping a UCSD-themed coin, with a picture of King Triton’s face as the heads side and a picture of his mermaid-like tail as the tails side.

Teresa flips the coin 21 times and sees 13 heads and 8 tails. She

stores this information in a DataFrame named teresa that

has 21 rows and 2 columns, such that:

The "flips" column contains "Heads" 13

times and "Tails" 8 times.

The "Wolftown" column contains "Teresa"

21 times.

Then, Sophia flips the coin 11 times and sees 4 heads and 7 tails.

She stores this information in a DataFrame named sophia

that has 11 rows and 2 columns, such that:

The "flips" column contains "Heads" 4

times and "Tails" 7 times.

The "Makai" column contains "Sophia" 11

times.

How many rows are in the following DataFrame? Give your answer as an integer.

teresa.merge(sophia, on="flips")Hint: The answer is less than 200.

Answer: 108

Since we used the argument on="flips, rows from

teresa and sophia will be combined whenever

they have matching values in their "flips" columns.

For the teresa DataFrame:

"Heads" in the

"flips" column."Tails" in the

"flips" column.For the sophia DataFrame:

"Heads" in the

"flips" column."Tails" in the

"flips" column.The merged DataFrame will also only have the values

"Heads" and "Tails" in its

"flips" column. - The 13 "Heads" rows from

teresa will each pair with the 4 "Heads" rows

from sophia. This results in 13

\cdot 4 = 52 rows with "Heads" - The 8

"Tails" rows from teresa will each pair with

the 7 "Tails" rows from sophia. This results

in 8 \cdot 7 = 56 rows with

"Tails".

Then, the total number of rows in the merged DataFrame is 52 + 56 = 108.

The average score on this problem was 54%.

Let A be your answer to the previous part. Now, suppose that:

teresa contains an additional row, whose

"flips" value is "Total" and whose

"Wolftown" value is 21.

sophia contains an additional row, whose

"flips" value is "Total" and whose

"Makai" value is 11.

Suppose we again merge teresa and sophia on

the "flips" column. In terms of A, how many rows are in the new merged

DataFrame?

A

A+1

A+2

A+4

A+231

Answer: A+1

The additional row in each DataFrame has a unique

"flips" value of "Total". When we merge on the

"flips" column, this unique value will only create a single

new row in the merged DataFrame, as it pairs the "Total"

from teresa with the "Total" from

sophia. The rest of the rows are the same as in the

previous merge, and as such, they will contribute the same number of

rows, A, to the merged DataFrame. Thus,

the total number of rows in the new merged DataFrame will be A (from the original matching rows) plus 1

(from the new "Total" rows), which sums up to A+1.

The average score on this problem was 46%.

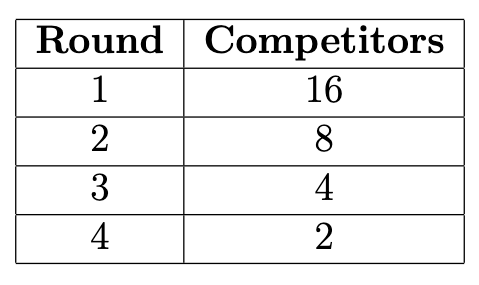

Michelle and Abel are each touring apartments for where they might

live next year. Michelle wants to be close to UCSD so she can attend

classes easily. Abel is graduating and wants to live close to the beach

so he can surf. Each person makes their own DataFrame (called

michelle and abel respectively), to keep track

of all the apartments that they toured. Both michelle and

abel came from querying apts, so both

DataFrames have the same columns and structure as apts.

Here are some details about the apartments they toured.

We’ll assume for this problem only that there is just one apartment

of each size available at each complex, so that if they both tour a one

bedroom apartment at the same complex, it is the exact same apartment

with the same "Apartment ID".

What does the following expression evaluate to?

michelle.merge(abel, left_index=True, right_index=True).shape[0]Answer: 8

This expression uses the indices of michelle and

abel to merge. Since both use the index of

"Apartment ID" and we are assuming that there is only one

apartment of each size available at each complex, we only need to see

how many unique apartments michelle and abel

share. Since there are 8 complexes that they both visited, only the one

bedroom apartments in these complexes will be displayed in the resulting

merged DataFrame. Therefore, we will only have 8 apartments, or 8

rows.

The average score on this problem was 48%.

What does the following expression evaluate to?

michelle.merge(abel, on=“Bed”).shape[0]

Answer: 240

This expression merges on the "Bed" column, so we need

to look at the data in this column for the two DataFrames. Within this

column, michelle and abel share only one

specific type of value: "One". With the details that are

given, michelle has 12 rows containing this value while

abel has 20 rows containing this value. Since we are

merging on this row, each row in abel that contains the

"One" value will be matched with a row in

michelle that also contains the value, meaning one row in

michelle will turn into twelve after the merge.

Thus, to compute the total number of rows from this merge expression,

we multiply the number of rows in michelle with the number

of rows in abel that fit the cross-criteria of

"Bed". Numerically, this would be 12 \cdot 20 = 240.

The average score on this problem was 33%.

What does the following expression evaluate to?

michelle.merge(abel, on=“Complex”).shape[0] Answer: 32

To approach this question, we first need to determine how many

complexes Michelle and Abel have in common: 8. We also know that each

complex was toured twice by both Michelle and Abel, so there are two

copies of each complex in the michelle and

abel DataFrames. Therefore, when we merge the DataFrames,

the two copies of each complex will match with each other, effectively

creating four copies for each complex from the original two. Since this

is done for each complex, we have 8 \cdot (2

\cdot 2) = 32.

The average score on this problem was 19%.

What does the following expression evaluate to?

abel.merge(abel, on=“Bed”).shape[0]

Answer: 800

Since this question deals purely with the abel

DataFrame, we need to fully understand what is inside it. There are 40

apartments (or rows): 20 one bedrooms and 20 two bedrooms. When we

self-merge on the "Bed" column, it is imperative to know

that every one bedroom apartment will be matched with the 20 other one

bedroom apartments (including itself)! This also goes for the two

bedroom apartments. Therefore, we have 20

\cdot 20 + 20 \cdot 20 = 800.

The average score on this problem was 28%.

We wish to compare the average rent for studio apartments in different complexes.

Our goal is to create a DataFrame studio_avg where each

complex with studio apartments appears once. The DataFrame should

include a column named "Rent" that contains the average

rent for all studio apartments in that complex. For each of the

following strategies, determine if the code provided works as intended,

gives an incorrect answer, or errors.

studio = apts[apts.get("Bed") == "Studio"]

studio_avg = studio.groupby("Complex").mean().reset_index()Works as intended

Gives an incorrect answer

Errors

studio_avg = apts.groupby("Complex").min().reset_index()Works as intended

Gives an incorrect answer

Errors

grouped = apts.groupby(["Bed", "Complex"]).mean().reset_index()

studio_avg = grouped[grouped.get("Bed") == "Studio"]Works as intended

Gives an incorrect answer

Errors

grouped = apts.groupby("Complex").mean().reset_index()

studio_avg = grouped[grouped.get("Bed") == "Studio"]Works as intended

Gives an incorrect answer

Errors

Answer:

studio is set to a DataFrame that is queried from the

apts DataFrame so that it contains only rows that have the

"Studio" value in "Bed". Then, with

studio, it groups by the "Complex" and

aggregates by the mean. Finally, it resets its index. Since we have a

DataFrame that only has "Studio"s , grouping by the

"Complex" will take the mean of every numerical column -

including the rent - in the DataFrame per "Complex",

effectively reaching our goal.

The average score on this problem was 96%.

studio_avg is created by grouping

"Complex" and aggregating by the minimum. However, as the

question asks for the average rent, getting the minimum

rent of every complex does not reach the conclusion the question asks

for.

The average score on this problem was 95%.

grouped is made through first grouping by both the

"Bed" and "Complex" columns then taking the

mean and resetting the index. Since we are grouping by both of these

columns, we separate each type of "Bed" by the

"Complex" it belongs to while aggregating by the mean for

every numerical column. After resetting the index, we are left with a

DataFrame that contains the mean of every "Bed" and

"Complex" combination. A sample of the DataFrame might look

like this:| Bed | Complex | Rent | … |

|---|---|---|---|

| One | Costa Verde Village | 3200 | … |

| One | Westwood | 3000 | … |

| … | … | … | … |

Then, when we assign studio_avg, we take this DataFrame

and only get the rows in which grouped’s "Bed"

column contains "Studio". As we already

.groupby()’d and aggregated by the mean for each

"Bed" and "Complex" pair, we arrive at the

solution the question requests for.

The average score on this problem was 84%.

grouped, we only .groupby() the

"Complex" column, aggregate by the mean, and reset index.

Then, we attempt to assign studio_avg to the resulting

DataFrame of a query from our grouped DataFrame. However,

this wouldn’t work at all because when we grouped by

"Complex" and aggregated by the mean to create

grouped, the .groupby() removed our

"Bed" column since it isn’t numerical. Therefore, when we

attempt to query by "Bed", babypandas cannot locate such

column since it was removed - resulting in an error.

The average score on this problem was 60%.

Consider the DataFrame alternate_approach defined as

follows

grouped = apts.groupby(["Bed", "Complex"]).mean().reset_index()

alternate_approach = grouped.groupby("Complex").min()Suppose that the "Rent" column of

alternate_approach has all the same values as the

"Rent" column of studio_avg, where

studio_avg is the DataFrame described in part (a). Which of

the following are valid conclusions about apts? Select all

that apply.

No complexes have studio apartments.

Every complex has at least one studio apartment.

Every complex has exactly one studio apartment.

Some complexes have only studio apartments.

In every complex, the average price of a studio apartment is less than or equal to the average price of a one bedroom apartment.

In every complex, the single cheapest apartment is a studio apartment.

None of these.

Answer: Options 2 and 5

alternate approach first groups by "Bed"

and "Complex" , takes the mean of all the columns, and

resets the index such that "Bed" and "Complex"

are no longer indexes. Now there is one row per "Bed" and

"Complex" combination that exists in apts and

all columns contain the mean value for each of these "Bed"

and "Complex" combinations. Then it groups by

"Complex" again, taking the minimum value of all columns.

The output is a DataFrame indexed by "Complex" where the

"Rent"column contains the minimum rent (from of all the

average prices for each type of "Bed").

"Rent" column in alternate_approachin contains

the minimum of all the average prices for each type of

"Bed" and the "Rent" column in

"studio_avg" contains the average rent for studios in each

type of complex. Even though they contain the same values, this does not

mean that no studios exist in any complexes. If this were the case,

studio_avg would be an empty DataFrame and

alternate_approach would not be.alternate_approach would not

appear in studio_avg.studio_avg has the average of

all these studios for each complex and alternate_approach

will have the minimum rent (from all the average prices for each type of

bedroom). Just because the columns are the same does not mean that there

is only one studio per complex.studio_avg contains the average

price for a studio in each complex. alternate_approach

contains the minimum rent from the average rents of all types of

bedrooms for each complex. Since these columns are the same, this means

that the average price of a studio must be lower (or equal to) the

average price of a one bedroom (or any other type of bedroom) for all