
Welcome! The problems shown below should be worked out on paper, since the quizzes and exams you take in this course will also be on paper. You do not need to submit your solutions anywhere.

We encourage you to complete this worksheet in groups during an extra practice session on Friday, March 1st. Solutions will be posted after all sessions have finished. This problem set is not designed to take any particular amount of time; focus on understanding concepts, not on getting through all the questions.

You survey 100 DSC majors and 140 CSE majors to ask them which video streaming service they use most. The resulting distributions are given in the table below. Note that each column sums to 1.

| Service | DSC Majors | CSE Majors |
|---|---|---|
| Netflix | 0.4 | 0.35 |
| Hulu | 0.25 | 0.2 |
| Disney+ | 0.1 | 0.1 |
| Amazon Prime Video | 0.15 | 0.3 |
| Other | 0.1 | 0.05 |

For example, 20% of CSE Majors said that Hulu is their most used video streaming service. Note that if a student doesn’t use video streaming services, their response is counted as Other.

What is the total variation distance (TVD) between the distribution for DSC majors and the distribution for CSE majors? Give your answer as an exact decimal.

Answer: 0.15
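As a quick check of the arithmetic, the TVD is half the sum of the absolute differences between the two columns. The sketch below recomputes it with NumPy; the names dsc and cse are illustrative labels for the two columns of the table, not part of the original problem.

```python
import numpy as np

# Proportions in table order: Netflix, Hulu, Disney+, Amazon Prime Video, Other
dsc = np.array([0.4, 0.25, 0.1, 0.15, 0.1])
cse = np.array([0.35, 0.2, 0.1, 0.3, 0.05])

# TVD = half the sum of the absolute differences between the two distributions
tvd = np.abs(dsc - cse).sum() / 2
print(round(tvd, 2))
```

The absolute differences are 0.05, 0.05, 0, 0.15, and 0.05, which sum to 0.3, and half of that is 0.15.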

The average score on this problem was 89%.

Suppose we only break down video streaming services into four categories: Netflix, Hulu, Disney+, and Other (which now includes Amazon Prime Video). Now we recalculate the TVD between the two distributions. How does the TVD now compare to your answer to part (a)?

less than (a)

equal to (a)

greater than (a)

Answer: less than (a)
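To see why the TVD shrinks, it may help to redo the calculation with Amazon Prime Video folded into Other. In this minimal sketch the names dsc_merged and cse_merged are illustrative; the numbers come from the table above.

```python
import numpy as np

# Four categories: Netflix, Hulu, Disney+, Other (Other now absorbs Amazon Prime Video)
dsc_merged = np.array([0.4, 0.25, 0.1, 0.15 + 0.1])
cse_merged = np.array([0.35, 0.2, 0.1, 0.3 + 0.05])

tvd_merged = np.abs(dsc_merged - cse_merged).sum() / 2
print(round(tvd_merged, 2))
```

Before merging, the Amazon Prime Video difference (0.15 - 0.3) and the Other difference (0.1 - 0.05) had opposite signs. Once the categories are combined, those differences partially cancel, leaving a single difference of 0.1 in absolute value instead of 0.15 + 0.05.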

The average score on this problem was 93%.

Arya was curious how many UCSD students used Hulu over Thanksgiving break. He surveys 250 students and finds that 130 of them did use Hulu over break and 120 did not.

Using this data, Arya decides to test the following hypotheses:

Null Hypothesis: Over Thanksgiving break, an equal number of UCSD students did use Hulu and did not use Hulu.

Alternative Hypothesis: Over Thanksgiving break, more UCSD students did use Hulu than did not use Hulu.

Which of the following could be used as a test statistic for the hypothesis test?

The proportion of students who did use Hulu minus the proportion of students who did not use Hulu.

The absolute value of the proportion of students who did use Hulu minus the proportion of students who did not use Hulu.

The proportion of students who did use Hulu plus the proportion of students who did not use Hulu.

The absolute value of the proportion of students who did use Hulu plus the proportion of students who did not use Hulu.

Answer: The proportion of students who did use Hulu minus the proportion of students who did not use Hulu.

The average score on this problem was 81%.

For the test statistic that you chose in part (a), what is the observed value of the statistic? Give your answer either as an exact decimal or a simplified fraction.

Answer: 0.04

The average score on this problem was 90%.

If the p-value of the hypothesis test is 0.053, what can we conclude, at the standard 0.05 significance level?

We reject the null hypothesis.

We fail to reject the null hypothesis.

We accept the null hypothesis.

Answer: We fail to reject the null hypothesis.

The average score on this problem was 87%.
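The three parts above can be tied together with a rough simulation sketch. It assumes the null model is "each surveyed student is equally likely to have used or not used Hulu" and uses np.random.multinomial to draw 250 students at a time; the seed and variable names are arbitrary choices for illustration. The 0.053 in the last part is a hypothetical value, so the p-value printed here is not expected to match it.

```python
import numpy as np

np.random.seed(10)  # arbitrary seed, only so the sketch is repeatable

n = 250
# Proportion who used Hulu minus proportion who did not: (130 - 120) / 250
observed = (130 - 120) / n

simulated = np.array([])
for i in np.arange(10000):
    counts = np.random.multinomial(n, [0.5, 0.5])  # [used Hulu, did not use Hulu]
    stat = (counts[0] - counts[1]) / n
    simulated = np.append(simulated, stat)

# Large positive values favor the alternative, so count simulated values >= observed
p_value = np.mean(simulated >= observed)
print(observed, p_value)
```

The statistic is computed the same way for the observed and simulated data so that the >= comparison is not thrown off by floating-point rounding.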

In some cities, the number of sunshine hours per month is relatively consistent throughout the year. São Paulo, Brazil is one such city; in all months of the year, the number of sunshine hours per month is somewhere between 139 and 173. New York City’s, on the other hand, ranges from 139 to 268.

Gina and Abel, both San Diego natives, are interested in assessing how “consistent” the number of sunshine hours per month in San Diego appears to be. Specifically, they’d like to test the following hypotheses:

Null Hypothesis: The number of sunshine hours per month in San Diego is drawn from the uniform distribution, \left[\frac{1}{12}, \frac{1}{12}, ..., \frac{1}{12}\right]. (In other words, the number of sunshine hours per month in San Diego is equal in all 12 months of the year.)

Alternative Hypothesis: The number of sunshine hours per month in San Diego is not drawn from the uniform distribution.

As their test statistic, Gina and Abel choose the total variation distance. To simulate samples under the null, they will sample from a categorical distribution with 12 categories (January, February, and so on, through December), each of which has an equal probability of being chosen.

In order to run their hypothesis test, Gina and Abel need a way to calculate their test statistic. Below is an incomplete implementation of a function that computes the TVD between two arrays of length 12, each of which represents a categorical distribution.

def calculate_tvd(dist1, dist2):
    return np.mean(np.abs(dist1 - dist2)) * ____

Fill in the blank so that calculate_tvd works as intended.

1 / 6

1 / 3

1 / 2

2

3

6

Answer: 6

The TVD is the sum of the absolute differences in proportions,

divided by 2. In the code to the left of the blank, we’ve computed the

mean of the absolute differences in proportions, which is the same as

the sum of the absolute differences in proportions, divided by 12 (since

len(dist1) is 12). To correct the fact that we

divided by 12, we multiply by 6, so that we’re only dividing by 2.
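A small numerical check of this reasoning: the sketch below compares the completed function against the definition of TVD (half the sum of absolute differences). The array example is a made-up distribution over 12 months, used only for illustration.

```python
import numpy as np

def calculate_tvd(dist1, dist2):
    # For length-12 arrays, mean = sum / 12, so multiplying by 6 gives sum / 2
    return np.mean(np.abs(dist1 - dist2)) * 6

uniform = np.ones(12) / 12
# A made-up distribution over 12 months (the counts sum to 24), for illustration only
example = np.array([3, 1, 2, 2, 1, 1, 4, 2, 2, 2, 2, 2]) / 24

by_definition = np.abs(example - uniform).sum() / 2
print(np.isclose(calculate_tvd(example, uniform), by_definition))
```

Note that the factor of 6 is only right for arrays of length 12; a version meant for arbitrary lengths would use np.sum(...) / 2 instead.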

The average score on this problem was 17%.

Moving forward, assume that calculate_tvd works

correctly.

Now, complete the implementation of the function

uniform_test, which takes in an array

observed_counts of length 12 containing the number of

sunshine hours each month in a city and returns the p-value for the

hypothesis test stated at the start of the question.

def uniform_test(observed_counts):
    # The values in observed_counts are counts, not proportions!
    total_count = observed_counts.sum()
    uniform_dist = __(b)__
    tvds = np.array([])
    for i in np.arange(10000):
        simulated = __(c)__
        tvd = calculate_tvd(simulated, __(d)__)
        tvds = np.append(tvds, tvd)
    return np.mean(tvds __(e)__ calculate_tvd(uniform_dist, __(f)__))

What goes in blank (b)? (Hint: The function np.ones(k) returns an array of length k in which all elements are 1.)

Answer: np.ones(12) / 12

uniform_dist needs to be the same as the uniform

distribution provided in the null hypothesis, \left[\frac{1}{12}, \frac{1}{12}, ...,

\frac{1}{12}\right].

In code, this is an array of length 12 in which each element is equal

to 1 / 12. np.ones(12)

creates an array of length 12 in which each value is 1; for

each value to be 1 / 12, we divide np.ones(12)

by 12.

The average score on this problem was 66%.

What goes in blank (c)?

np.random.multinomial(12, uniform_dist)

np.random.multinomial(12, uniform_dist) / 12

np.random.multinomial(12, uniform_dist) / total_count

np.random.multinomial(total_count, uniform_dist)

np.random.multinomial(total_count, uniform_dist) / 12

np.random.multinomial(total_count, uniform_dist) / total_count

Answer: np.random.multinomial(total_count, uniform_dist) / total_count

The idea here is to repeatedly generate an array of proportions that

results from distributing total_count hours across the 12

months in a way that each month is equally likely to be chosen. Each

time we generate such an array, we’ll determine its TVD from the uniform

distribution; doing this repeatedly gives us an empirical distribution

of the TVD under the assumption the null hypothesis is true.

The average score on this problem was 21%.

What goes in blank (d)?

Answer: uniform_dist

As mentioned above:

Each time we generate such an array, we’ll determine its TVD from the uniform distribution; doing this repeatedly gives us an empirical distribution of the TVD under the assumption the null hypothesis is true.

The average score on this problem was 54%.

What goes in blank (e)?

>

>=

<

<=

==

!=

Answer: >=

The purpose of the last line of code is to compute the p-value for the hypothesis test. Recall, the p-value of a hypothesis test is the proportion of simulated test statistics that are as or more extreme than the observed test statistic, under the assumption the null hypothesis is true. In this context, “as extreme or more extreme” means the simulated TVD is greater than or equal to the observed TVD (since larger TVDs mean “more different”).

The average score on this problem was 77%.

What goes in blank (f)?

Answer: observed_counts / total_count or observed_counts / observed_counts.sum()

Blank (f) needs to contain the observed distribution of sunshine hours (as an array of proportions) that we compare against the uniform distribution to calculate the observed TVD. This observed TVD is then compared with the distribution of simulated TVDs to calculate the p-value. The observed counts are converted to proportions by dividing by the total count so that the observed distribution is on the same scale as the simulated and expected uniform distributions, which are also in proportions.
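Putting the answers to blanks (b) through (f) together gives the sketch below. The function bodies follow the answers above; the array example_counts is a set of hypothetical monthly sunshine hours invented for this example, not real data for any city.

```python
import numpy as np

def calculate_tvd(dist1, dist2):
    return np.mean(np.abs(dist1 - dist2)) * 6

def uniform_test(observed_counts):
    # The values in observed_counts are counts, not proportions!
    total_count = observed_counts.sum()
    uniform_dist = np.ones(12) / 12                                   # blank (b)
    tvds = np.array([])
    for i in np.arange(10000):
        # blank (c): simulate counts under the null, then convert to proportions
        simulated = np.random.multinomial(total_count, uniform_dist) / total_count
        tvd = calculate_tvd(simulated, uniform_dist)                  # blank (d)
        tvds = np.append(tvds, tvd)
    # blanks (e) and (f): proportion of simulated TVDs >= the observed TVD
    return np.mean(tvds >= calculate_tvd(uniform_dist, observed_counts / total_count))

# Hypothetical monthly sunshine hours, invented for this example
example_counts = np.array([220, 230, 260, 280, 250, 240, 300, 290, 250, 240, 220, 215])
print(uniform_test(example_counts))
```

One sanity check on the logic: if every month has exactly the same count, the observed TVD is 0, every simulated TVD is at least 0, and the function returns a p-value of 1.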

The average score on this problem was 27%.

Every year, the American Kennel Club holds a Photo Contest for dogs. Eric wants to know whether toy dogs win disproportionately more often than other kinds of dogs. He has collected a sample of 500 dogs that have won the Photo Contest. In his sample, 200 dogs were toy dogs.

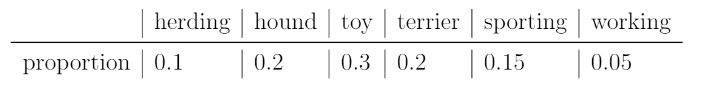

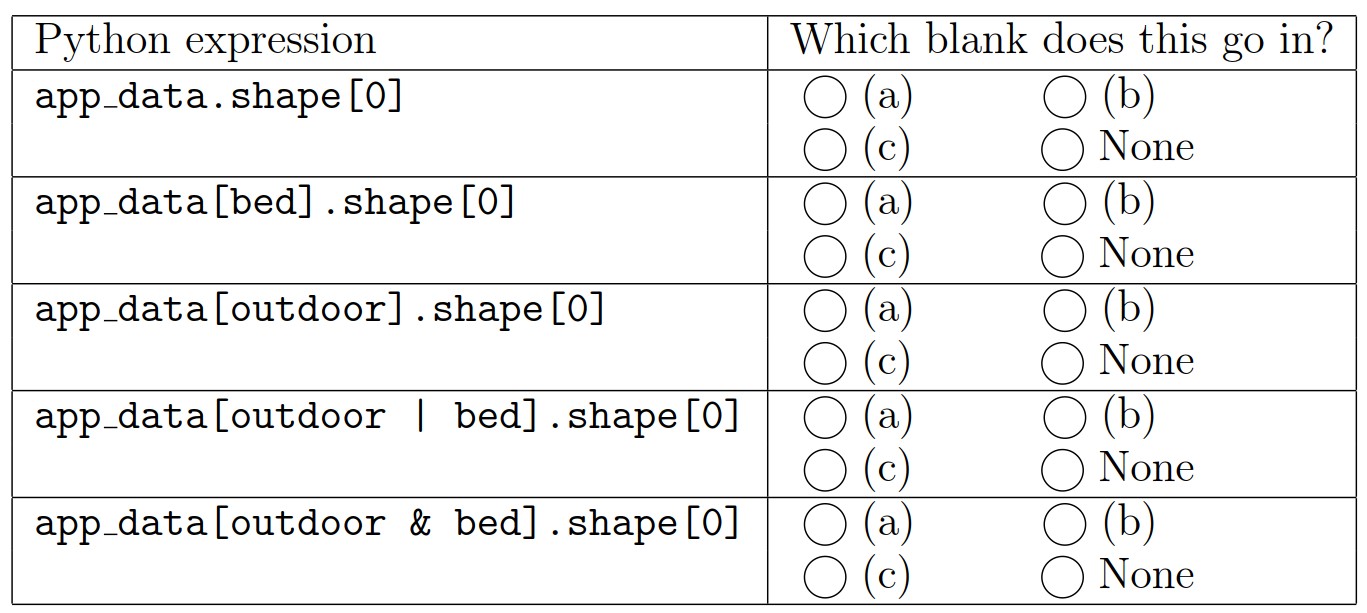

Eric also knows the distribution of dog kinds in the population:

Select all correct statements of the null hypothesis.

The distribution of dogs in the sample is the same as the distribution in the population. Any difference is due to chance.

Every dog in the sample was drawn uniformly at random without replacement from the population.

The number of toy dogs that win is the same as the number of toy dogs in the population.

The proportion of toy dogs that win is the same as the proportion of toy dogs in the population.

The proportion of toy dogs that win is 0.3.

The proportion of toy dogs that win is 0.5.

Answer: Options 4 & 5

A null hypothesis is the hypothesis that there is no significant difference between specified populations, any observed difference being due to sampling or experimental error. Let’s consider what a potential null hypothesis might look like. A potential null hypothesis would be that there is no difference between the win proportion of toy dogs compared to the proportion of toy dogs in the population.

Option 1: We’re not really looking at the distribution of dogs in our sample vs. dogs in our population; rather, we’re looking at whether toy dogs win more than other dogs. In other words, the only factors we’re really considering are the proportion of toy dogs to normal dogs, as well as the win percentages of toy dogs to normal dogs, and so the distribution of the population doesn’t really matter. Furthermore, this option makes no reference to the win rate of toy dogs.

Option 2: This isn’t really even a null hypothesis, but rather more of a description of a test procedure. This option also makes no reference to the win rate of toy dogs.

Option 3: This statement doesn’t really make sense in that it is illogical to compare the raw number of toy dog wins to the number of toy dogs in the population, because the number of toy dogs is always at least the number of toy dogs that win.

Option 4: This statement is in line with the null hypothesis.

Option 5: This statement is another potential null hypothesis since the proportion of toy dogs in the population is 0.3.

Option 6: This statement, although similar to Option 5, would not be a null hypothesis because 0.5 has no relevance to any of the relevant proportions. While it’s true that if the proportion of toy dogs that win is over 0.5, we could maybe infer that toy dogs win the majority of the time, the question is not whether toy dogs win most of the time, but rather whether toy dogs win a disproportionately high number of times relative to their share of the population.

The average score on this problem was 83%.

Select the correct statement of the alternative hypothesis.

The model in the null hypothesis underestimates how often toy dogs win.

The model in the null hypothesis overestimates how often toy dogs win.

The distribution of dog kinds in the sample is not the same as the population.

The data were not drawn at random from the population.

Answer: Option 1

The alternative hypothesis is the hypothesis we’re trying to support, which in this case is that toy dogs happen to win more than other dogs.

Option 1: This is in line with our alternative hypothesis, since proving that the null hypothesis underestimates how often toy dogs win means that toy dogs win more than other dogs.

Option 2: This is the opposite of what we’re trying to prove.

Option 3: We don’t really care too much about the distribution of dog kinds, since that doesn’t help us determine toy dog win rates compared to other dogs.

Option 4: Again, we don’t care whether all dogs are chosen according to the probabilities in the null model, instead we care specifically about toy dogs.

The average score on this problem was 67%.

Select all the test statistics that Eric can use to conduct his hypothesis test.

The proportion of toy dogs in his sample.

The number of toy dogs in his sample.

The absolute difference of the sample proportion of toy dogs and 0.3.

The absolute difference of the sample proportion of toy dogs and 0.5.

The TVD between his sample and the population.

Answer: Option 1 and Option 2

Option 1: This option is correct. According to our null hypothesis, the proportion of toy dogs among winners equals the proportion of toy dogs in the population. Thus the proportion of toy dogs in Eric’s sample is a perfectly valid test statistic.

Option 2: This option is correct. Since the sample size is fixed at 500, knowing the count is equivalent to knowing the proportion.

Option 3: This option is incorrect. The absolute difference of the sample proportion of toy dogs and 0.3 doesn’t help us because the absolute difference won’t tell us whether or not the sample proportion of toy dogs is lower than 0.3 or higher than 0.3.

Option 4: This option is incorrect for the same reasoning as above, but also 0.5 isn’t a relevant number anyways.

Option 5: This option is incorrect because TVD measures distance between two categorical distributions, and here we only care about one particular category (not all categories) being the same.

The average score on this problem was 70%.

Eric decides on this test statistic: the proportion of toy dogs minus the proportion of non-toy dogs. What is the observed value of the test statistic?

-0.4

-0.2

0

0.2

0.4

Answer: -0.2

For our given sample, the proportion of toy dogs is \frac{200}{500}=0.4 and the proportion of non-toy dogs is \frac{500-200}{500}=0.6, so 0.4 - 0.6 = -0.2.

The average score on this problem was 74%.

Which snippets of code correctly compute Eric’s test statistic on one simulated sample under the null hypothesis? Select all that apply. The result must be stored in the variable stat. Below are the 5 snippets.

Snippet 1:

a = np.random.choice([0.3, 0.7])
b = np.random.choice([0.3, 0.7])
stat = a - b

Snippet 2:

a = np.random.choice([0.1, 0.2, 0.3, 0.2, 0.15, 0.05])
stat = a - (1 - a)

Snippet 3:

a = np.random.multinomial(500, [0.1, 0.2, 0.3, 0.2, 0.15, 0.05]) / 500
stat = a[2] - (1 - a[2])

Snippet 4:

a = np.random.multinomial(500, [0.3, 0.7]) / 500
stat = a[0] - (1 - a[0])

Snippet 5:

a = df.sample(500, replace=True)
b = a[a.get("kind") == "toy"].shape[0] / 500
stat = b - (1 - b)

Snippet 1

Snippet 2

Snippet 3

Snippet 4

Snippet 5

Answer: Snippet 3 & Snippet 4

Snippet 1: This is incorrect because np.random.choice() picks a single value from the list, so a and b are each either 0.3 or 0.7. That chooses between two probabilities rather than simulating a sample of 500 dogs.

Snippet 2: This is incorrect because np.random.choice() only chooses from the values within the list. As a sanity check, a (the simulated proportion of toy dogs) should be able to take on more values than the ones in the list.

Snippet 3: This option is correct. Recall, in np.random.multinomial(n, [p_1, ..., p_k]), n is the number of experiments, and [p_1, ..., p_k] is a sequence of probabilities. The method returns an array of length k in which each element contains the number of occurrences of an event, where the probability of the ith event is p_i. In this snippet, np.random.multinomial(500, [0.1, 0.2, 0.3, 0.2, 0.15, 0.05]) generates an array of length 6 (len([0.1, 0.2, 0.3, 0.2, 0.15, 0.05])) that contains the number of occurrences of each kind of dog according to the given distribution (the population distribution). We divide by 500 in the first line to convert the counts in the resulting array into proportions. To access the proportion of toy dogs in our sample, we take the entry whose probability in the distribution is 0.3, which is the third entry in the array, or a[2]. To calculate our test statistic, we take the proportion of toy dogs minus the proportion of non-toy dogs, or a[2] - (1 - a[2]).

Snippet 4: This option is correct. This approach is similar to the one above except we’re only considering the probability distribution of toy dogs vs non-toy dogs, which is what we wanted in the first place. The rest of the steps are similar to the ones above.

Snippet 5: This is incorrect. Note that df is simply a DataFrame containing information about the dogs, and it may or may not reflect the population distribution of dogs that participate in the photo contest.
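To illustrate that Snippets 3 and 4 simulate the same statistic, the sketch below runs each one many times and compares the averages. The loop, the seed, and the names stats_3 and stats_4 are additions for illustration; only the two lines inside the loop that call np.random.multinomial come from the snippets.

```python
import numpy as np

np.random.seed(3)  # arbitrary seed for repeatability

stats_3 = np.array([])
stats_4 = np.array([])
for i in np.arange(2000):
    # Snippet 3: all six kinds of dogs, with toy dogs at index 2
    a = np.random.multinomial(500, [0.1, 0.2, 0.3, 0.2, 0.15, 0.05]) / 500
    stats_3 = np.append(stats_3, a[2] - (1 - a[2]))
    # Snippet 4: just toy vs. non-toy
    b = np.random.multinomial(500, [0.3, 0.7]) / 500
    stats_4 = np.append(stats_4, b[0] - (1 - b[0]))

# Under the null, both should center near 0.3 - 0.7 = -0.4
print(stats_3.mean(), stats_4.mean())
```

Both averages should land close to -0.4, since under the null the proportion of toy dogs is 0.3 and the proportion of non-toy dogs is 0.7.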

The average score on this problem was 72%.

After simulating, Eric has an array called sim that

stores his simulated test statistics, and a variable called

obs that stores his observed test statistic.

What should go in the blank to compute the p-value?

np.mean(sim _______ obs)

<

<=

==

>=

>

Answer: Option 4: >=

Note that to calculate the p-value we look for test statistics that are equal to the observed statistic or even further in the direction of the alternative. In this case, the alternative says toy dogs win more often than the null model predicts, which corresponds to simulated statistics at least as large as the observed value of -0.2, and thus we use >=.

The average score on this problem was 66%.

Eric’s p-value is 0.03. If his p-value cutoff is 0.01, what does he conclude?

He rejects the null in favor of the alternative.

He accepts the null.

He accepts the alternative.

He fails to reject the null.

Answer: Option 4: He fails to reject the null

Option 1: Note that since our p-value was greater than 0.01, we fail to reject the null.

Option 2: We can never “accept” the null hypothesis.

Option 3: We didn’t accept the alternative since we failed to reject the null.

Option 4: This option is correct because our p-value was larger than our cutoff.

The average score on this problem was 86%.

For this question, let’s think of the data in app_data

as a random sample of all IKEA purchases and use it to test the

following hypotheses.

Null Hypothesis: IKEA sells an equal amount of beds

(category 'bed') and outdoor furniture (category

'outdoor').

Alternative Hypothesis: IKEA sells more beds than outdoor furniture.

The DataFrame app_data contains 5000 rows, which form

our sample. Of these 5000 products,

Which of the following could be used as the test statistic for this hypothesis test? Select all that apply.

Among 2500 beds and outdoor furniture items, the absolute difference between the proportion of beds and the proportion of outdoor furniture.

Among 2500 beds and outdoor furniture items, the proportion of beds.

Among 2500 beds and outdoor furniture items, the number of beds.

Among 2500 beds and outdoor furniture items, the number of beds plus the number of outdoor furniture items.

Answer: Among 2500 beds and outdoor furniture items, the proportion of beds.

Among 2500 beds and outdoor furniture items, the number of beds.

Our test statistic needs to be able to distinguish between the two hypotheses. The first option does not do this, because it includes an absolute value. If the absolute difference between the proportion of beds and the proportion of outdoor furniture were large, it could be because IKEA sells more beds than outdoor furniture, but it could also be because IKEA sells more outdoor furniture than beds.

The second option is a valid test statistic, because if the proportion of beds is large, that suggests that the alternative hypothesis may be true.

Similarly, the third option works because if the number of beds (out of 2500) is large, that suggests that the alternative hypothesis may be true.

The fourth option is invalid because out of 2500 beds and outdoor furniture items, the number of beds plus the number of outdoor furniture items is always 2500. So the value of this statistic is constant regardless of whether the alternative hypothesis is true, which means it does not help you distinguish between the two hypotheses.

The average score on this problem was 78%.

Let’s do a hypothesis test with the following test statistic: among 2500 beds and outdoor furniture items, the proportion of outdoor furniture minus the proportion of beds.

Complete the code below to calculate the observed value of the test

statistic and save the result as obs_diff.

outdoor = (app_data.get('category')=='outdoor')

bed = (app_data.get('category')=='bed')

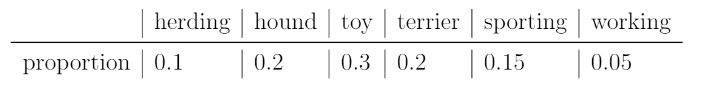

obs_diff = ( ___(a)___ - ___(b)___ ) / ___(c)___The table below contains several Python expressions. Choose the correct expression to fill in each of the three blanks. Three expressions will be used, and two will be unused.

Answer: Reading the table from top to bottom, the five expressions should be used in the following blanks: None, (b), (a), (c), None.

The correct way to define obs_diff is

outdoor = (app_data.get('category')=='outdoor')
bed = (app_data.get('category')=='bed')
obs_diff = (app_data[outdoor].shape[0] - app_data[bed].shape[0]) / app_data[outdoor | bed].shape[0]

The first provided line of code defines a boolean Series called outdoor with a value of True corresponding to each outdoor furniture item in app_data. Using this as the condition in a query results in a DataFrame of outdoor furniture items, and using .shape[0] on this DataFrame gives the number of outdoor furniture items. So app_data[outdoor].shape[0] represents the number of outdoor furniture items in app_data. Similarly, app_data[bed].shape[0] represents the number of beds in app_data. Likewise, app_data[outdoor | bed].shape[0] represents the total number of outdoor furniture items and beds in app_data. Notice that we need to use an or condition (|) to get a DataFrame that contains both outdoor furniture and beds.

We are told that the test statistic should be the proportion of outdoor furniture minus the proportion of beds. Translating this directly into code, this means the test statistic should be calculated as

obs_diff = app_data[outdoor].shape[0] / app_data[outdoor | bed].shape[0] - app_data[bed].shape[0] / app_data[outdoor | bed].shape[0]

Since this is a difference of two fractions with the same denominator, we can equivalently subtract the numerators first, then divide by the common denominator, using the mathematical fact \frac{a}{c} - \frac{b}{c} = \frac{a-b}{c}.

This yields the answer

obs_diff = (app_data[outdoor].shape[0] - app_data[bed].shape[0]) / app_data[outdoor | bed].shape[0]

Notice that this is the observed value of the test statistic because it’s based on the real-life data in the app_data DataFrame, not simulated data.
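The expression can be tried out on a small stand-in for app_data. The sketch below is an illustration under stated assumptions: it uses pandas in place of babypandas, builds a hypothetical DataFrame with only a 'category' column, and uses the counts quoted later in this worksheet (1000 beds and 1500 outdoor furniture items among 5000 rows). The 'other' label for the remaining rows is made up.

```python
import pandas as pd

# Hypothetical stand-in for app_data: only the 'category' column, with
# 1000 beds, 1500 outdoor items, and 2500 rows given a made-up 'other' label
app_data = pd.DataFrame({
    'category': ['bed'] * 1000 + ['outdoor'] * 1500 + ['other'] * 2500
})

outdoor = (app_data.get('category') == 'outdoor')
bed = (app_data.get('category') == 'bed')

# (number of outdoor items - number of beds) / (number of outdoor items and beds)
obs_diff = (app_data[outdoor].shape[0] - app_data[bed].shape[0]) / app_data[outdoor | bed].shape[0]
print(obs_diff)
```

With these counts the statistic is (1500 - 1000) / 2500 = 0.2. The denominator is 2500 rather than 5000 because the rows outside the two categories are excluded by outdoor | bed.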

The average score on this problem was 90%.

Which of the following is a valid way to generate one value of the test statistic according to the null model? Select all that apply.

Way 1:

multi = np.random.multinomial(2500, [0.5,0.5])
(multi[0] - multi[1])/2500

Way 2:

outdoor = np.random.multinomial(2500, [0.5,0.5])[0]/2500
bed = np.random.multinomial(2500, [0.5,0.5])[1]/2500
outdoor - bed

Way 3:

choice = np.random.choice([0, 1], 2500, replace=True)
choice_sum = choice.sum()
(choice_sum - (2500 - choice_sum))/2500

Way 4:

choice = np.random.choice(['bed', 'outdoor'], 2500, replace=True)
bed = np.count_nonzero(choice=='bed')
outdoor = np.count_nonzero(choice=='outdoor')
outdoor/2500 - bed/2500

Way 5:

outdoor = (app_data.get('category')=='outdoor')
bed = (app_data.get('category')=='bed')
samp = app_data[outdoor|bed].sample(2500, replace=True)
samp[samp.get('category')=='outdoor'].shape[0]/2500 - samp[samp.get('category')=='bed'].shape[0]/2500

Way 6:

outdoor = (app_data.get('category')=='outdoor')
bed = (app_data.get('category')=='bed')
samp = (app_data[outdoor|bed].groupby('category').count().reset_index().sample(2500, replace=True))
samp[samp.get('category')=='outdoor'].shape[0]/2500 - samp[samp.get('category')=='bed'].shape[0]/2500

Way 1

Way 2

Way 3

Way 4

Way 5

Way 6

Answer: Way 1, Way 3, Way 4, Way 6

Let’s consider each way in order.

Way 1 is a correct solution. This code begins by defining a variable

multi which will evaluate to an array with two elements

representing the number of items in each of the two categories, after

2500 items are drawn randomly from the two categories, with each

category being equally likely. In this case, our categories are beds and

outdoor furniture, and the null hypothesis says that each category is

equally likely, so this describes our scenario accurately. We can

interpret multi[0] as the number of outdoor furniture items

and multi[1] as the number of beds when we draw 2500 of

these items with equal probability. Using the same mathematical fact

from the solution to Problem 8.2, we can calculate the difference in

proportions as the difference in number divided by the total, so it is

correct to calculate the test statistic as

(multi[0] - multi[1])/2500.

Way 2 is an incorrect solution. Way 2 is based on a similar idea as

Way 1, except it calls np.random.multinomial twice, which

corresponds to two separate random processes of selecting 2500 items,

each of which is equally likely to be a bed or an outdoor furniture

item. However, it is not guaranteed that the number of outdoor furniture

items in the first random selection plus the number of beds in the

second random selection totals 2500. Way 2 calculates the proportion of

outdoor furniture items in one random selection minus the proportion of

beds in another. What we want to do instead is calculate the difference

between the proportion of outdoor furniture and beds in a single random

draw.

Way 3 is a correct solution. Way 3 does the random selection of items

in a different way, using np.random.choice. Way 3 creates a

variable called choice which is an array of 2500 values.

Each value is chosen from the list [0,1] with each of the

two list elements being equally likely to be chosen. Of course, since we

are choosing 2500 items from a list of size 2, we must allow

replacements. We can interpret the elements of choice by

thinking of each 1 as an outdoor furniture item and each 0 as a bed. By

doing so, this random selection process matches up with the assumptions

of the null hypothesis. Then the sum of the elements of

choice represents the total number of outdoor furniture

items, which the code saves as the variable choice_sum.

Since there are 2500 beds and outdoor furniture items in total,

2500 - choice_sum represents the total number of beds.

Therefore, the test statistic here is correctly calculated as the number

of outdoor furniture items minus the number of beds, all divided by the

total number of items, which is 2500.

Way 4 is a correct solution. Way 4 is similar to Way 3, except

instead of using 0s and 1s, it uses the strings 'bed' and

'outdoor' in the choice array, so the

interpretation is even more direct. Another difference is the way the

number of beds and number of outdoor furniture items is calculated. It

uses np.count_nonzero instead of sum, which wouldn’t make

sense with strings. This solution calculates the proportion of outdoor

furniture minus the proportion of beds directly.

Way 5 is an incorrect solution. As described in the solution to

Problem 8.2, app_data[outdoor|bed] is a DataFrame

containing just the outdoor furniture items and the beds from

app_data. Based on the given information, we know

app_data[outdoor|bed] has 2500 rows, 1000 of which

correspond to beds and 1500 of which correspond to outdoor furniture items. This

code defines a variable samp that comes from sampling this

DataFrame 2500 times with replacement. This means that each row of

samp is equally likely to be any of the 2500 rows of

app_data[outdoor|bed]. The fraction of these rows that are

beds is 1000/2500 = 2/5 and the

fraction of these rows that are outdoor furniture items is 1500/2500 = 3/5. This means the random

process of selecting rows randomly such that each row is equally likely

does not make each item equally likely to be a bed or outdoor furniture

item. Therefore, this approach does not align with the assumptions of

the null hypothesis.

Way 6 is a correct solution. Way 6 essentially modifies Way 5 to make

beds and outdoor furniture items equally likely to be selected in the

random sample. As in Way 5, the code involves the DataFrame

app_data[outdoor|bed] which contains 1000 beds and 1500

outdoor furniture items. Then this DataFrame is grouped by

'category' which results in a DataFrame indexed by

'category', which will have only two rows, since there are

only two values of 'category', either

'outdoor' or 'bed'. The aggregation function

.count() is irrelevant here. When the index is reset,

'category' becomes a column. Now, randomly sampling from

this two-row grouped DataFrame such that each row is equally likely to

be selected does correspond to choosing items such that each

item is equally likely to be a bed or outdoor furniture item. The last

line simply calculates the proportion of outdoor furniture items minus

the proportion of beds in our random sample drawn according to the null

model.
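One way to see that Way 2 does not produce the same statistic as Way 1 is to run both many times and compare the spread of the results. The sketch below does this; the loop, seed, and the array names way1 and way2 are additions for illustration.

```python
import numpy as np

np.random.seed(8)  # arbitrary seed for repeatability

way1 = np.array([])
way2 = np.array([])
for i in np.arange(5000):
    # Way 1: one draw of 2500 items, difference taken within that single draw
    multi = np.random.multinomial(2500, [0.5, 0.5])
    way1 = np.append(way1, (multi[0] - multi[1]) / 2500)
    # Way 2: outdoor proportion from one draw, bed proportion from a separate draw
    outdoor = np.random.multinomial(2500, [0.5, 0.5])[0] / 2500
    bed = np.random.multinomial(2500, [0.5, 0.5])[1] / 2500
    way2 = np.append(way2, outdoor - bed)

# Both center near 0, but their spreads differ
print(way1.std(), way2.std())
```

In Way 1 the two proportions always sum to 1, so a surplus of outdoor items is automatically a deficit of beds and the difference swings twice as far. In Way 2 the two proportions come from independent draws, which gives a narrower distribution and would lead to different p-values.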

The average score on this problem was 59%.

Suppose we generate 10,000 simulated values of the test statistic

according to the null model and store them in an array called

simulated_diffs. Complete the code below to calculate the

p-value for the hypothesis test.

np.count_nonzero(simulated_diffs _________ obs_diff)/10000

What goes in the blank?

<

<=

>

>=

Answer: <=

To answer this question, we need to know whether small values or large values of the test statistic indicate the alternative hypothesis. The alternative hypothesis is that IKEA sells more beds than outdoor furniture. Since we’re calculating the proportion of outdoor furniture minus the proportion of beds, this difference will be small (negative) if the alternative hypothesis is true. Larger (positive) values of the test statistic mean that IKEA sells more outdoor furniture than beds. A value near 0 means they sell beds and outdoor furniture equally.

The p-value is defined as the proportion of simulated test statistics

that are equal to the observed value or more extreme, where extreme

means in the direction of the alternative. In this case, since small

values of the test statistic indicate the alternative hypothesis, the

correct answer is <=.
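As a rough end-to-end sketch, the code below simulates the statistic with Way 1 and applies the <= comparison. It assumes the observed statistic is computed from the counts quoted in the Way 5 discussion (1500 outdoor furniture items and 1000 beds among 2500 items); the seed is arbitrary.

```python
import numpy as np

np.random.seed(5)  # arbitrary seed for repeatability

# Observed statistic, assuming 1500 outdoor items and 1000 beds among 2500 items
obs_diff = (1500 - 1000) / 2500

simulated_diffs = np.array([])
for i in np.arange(10000):
    multi = np.random.multinomial(2500, [0.5, 0.5])  # [outdoor, bed]
    simulated_diffs = np.append(simulated_diffs, (multi[0] - multi[1]) / 2500)

# Small (negative) values favor the alternative, so count simulated values <= observed
p_value = np.count_nonzero(simulated_diffs <= obs_diff) / 10000
print(p_value)
```

Under these assumed counts the observed statistic is positive (more outdoor furniture than beds), which points away from the alternative, so nearly all simulated values are at or below it and the p-value comes out very close to 1.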

The average score on this problem was 43%.

At the San Diego Model Railroad Museum, there are different admission prices for children, adults, and seniors. Over a period of time, as tickets are sold, employees keep track of how many of each type of ticket are sold. These ticket counts (in the order child, adult, senior) are stored as follows.

admissions_data = np.array([550, 1550, 400])

Complete the code below so that it creates an array admissions_proportions with the proportions of tickets sold to each group (in the order child, adult, senior).

def as_proportion(data):
    return __(a)__

admissions_proportions = as_proportion(admissions_data)

What goes in blank (a)?

Answer: data/data.sum()

To calculate proportion for each group, we divide each value in the array (tickets sold to each group) by the sum of all values (total tickets sold). Remember values in an array can be processed as a whole.
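Filling in the blank and running the code on the ticket counts gives the following minimal sketch.

```python
import numpy as np

admissions_data = np.array([550, 1550, 400])

def as_proportion(data):
    # Dividing an array by a single number divides every element by it
    return data / data.sum()

admissions_proportions = as_proportion(admissions_data)
print(admissions_proportions)
```

The total is 2500 tickets, so the proportions work out to 0.22 for children, 0.62 for adults, and 0.16 for seniors.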

The average score on this problem was 95%.

The museum employees have a model in mind for the proportions in which they sell tickets to children, adults, and seniors. This model is stored as follows.

model = np.array([0.25, 0.6, 0.15])

We want to conduct a hypothesis test to determine whether the admissions data we have is consistent with this model. Which of the following is the null hypothesis for this test?

Child, adult, and senior tickets might plausibly be purchased in proportions 0.25, 0.6, and 0.15.

Child, adult, and senior tickets are purchased in proportions 0.25, 0.6, and 0.15.

Child, adult, and senior tickets might plausibly be purchased in proportions other than 0.25, 0.6, and 0.15.

Child, adult, and senior tickets are purchased in proportions other than 0.25, 0.6, and 0.15.

Answer: Child, adult, and senior tickets are purchased in proportions 0.25, 0.6, and 0.15. (Option 2)

Recall that the null hypothesis states that the data were generated according to a specified model, with any observed difference from that model being due to chance alone. So, under the null, we assume tickets are purchased in exactly the proportions given by the model.

The average score on this problem was 88%.

Which of the following test statistics could we use to test our hypotheses? Select all that could work.

sum of differences in proportions

sum of squared differences in proportions

mean of differences in proportions

mean of squared differences in proportions

none of the above

Answer: sum of squared differences in proportions, mean of squared differences in proportions (Option 2, 4)

We need to use squared differences so that positive and negative differences do not cancel out when we compute the sum or mean. In fact, since both sets of proportions sum to 1, the signed differences always sum to 0, so neither their sum nor their mean reflects how far the data deviates from the model. This eliminates Options 1 and 3.
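
The cancellation can be checked numerically. The sketch below compares the model with the observed proportions from admissions_data (0.22, 0.62, 0.16); the variable names observed and diffs are chosen here for illustration.

```python
import numpy as np

model = np.array([0.25, 0.6, 0.15])
observed = np.array([0.22, 0.62, 0.16])  # proportions computed from admissions_data

diffs = observed - model

# The signed differences cancel: the sum (and so the mean) is 0 up to rounding error.
print(diffs.sum())
print(diffs.mean())

# The squared differences cannot cancel, so these grow as the data moves away from the model.
print((diffs ** 2).sum())
print((diffs ** 2).mean())
```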

The average score on this problem was 77%.

Below, we’ll perform the hypothesis test with a different test statistic, the mean of the absolute differences in proportions.

Recall that the ticket counts we observed for children, adults, and

seniors are stored in the array

admissions_data = np.array([550, 1550, 400]), and that our

model is model = np.array([0.25, 0.6, 0.15]).

For our hypothesis test to determine whether the admissions data is

consistent with our model, what is the observed value of the test

statistic? Give your answer as a number between 0 and 1, rounded to

three decimal places. (Suppose that the value you calculated is assigned

to the variable observed_stat, which you will use in later

questions.)

Answer: 0.02

We first calculate the proportion for each value in admissions_data:

$$\frac{550}{550+1550+400} = 0.22, \quad \frac{1550}{550+1550+400} = 0.62, \quad \frac{400}{550+1550+400} = 0.16$$

So, the observed distribution of admissions_data is [0.22, 0.62, 0.16].

Then, we calculate the observed value of the test statistic (the mean of the absolute differences in proportions):

$$\frac{|0.22-0.25|+|0.62-0.6|+|0.16-0.15|}{\text{number of groups}} = \frac{0.03+0.02+0.01}{3} = 0.02$$
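
The same arithmetic can be checked in code, using only the arrays defined in the problem:

```python
import numpy as np

admissions_data = np.array([550, 1550, 400])
model = np.array([0.25, 0.6, 0.15])

# Convert counts to proportions, then average the absolute differences from the model.
admissions_proportions = admissions_data / admissions_data.sum()
observed_stat = np.abs(admissions_proportions - model).mean()

print(round(observed_stat, 3))  # 0.02
```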

The average score on this problem was 82%.

Now, we want to simulate the test statistic 10,000 times under the

assumptions of the null hypothesis. Fill in the blanks below to complete

this simulation and calculate the p-value for our hypothesis test.

Assume that the variables admissions_data,

admissions_proportions, model, and

observed_stat are already defined as specified earlier in

the question.

simulated_stats = np.array([])
for i in np.arange(10000):
    simulated_proportions = as_proportion(np.random.multinomial(__(a)__, __(b)__))
    simulated_stat = __(c)__
    simulated_stats = np.append(simulated_stats, simulated_stat)

p_value = __(d)__

What goes in blank (a)? What goes in blank (b)? What goes in blank (c)? What goes in blank (d)?

Answer:

(a) admissions_data.sum()

(b) model

(c) np.abs(simulated_proportions - model).mean()

(d) np.count_nonzero(simulated_stats >= observed_stat) / 10000

Recall that in np.random.multinomial(n, [p_1, ..., p_k]), n is the number of trials and [p_1, ..., p_k] is a sequence of probabilities. The function returns an array of length k in which the ith element is the number of times the ith outcome occurred, where the probability of the ith outcome is p_i.

We want our simulated_proportions to be based on the same sample size as admissions_data, so we use admissions_data.sum() in (a).

Since our null hypothesis is based on model, we simulate from the distribution in model, so we have model in (b).

In (c), we compute the mean of the absolute differences in proportions. np.abs(simulated_proportions - model) gives us an array of absolute differences, and .mean() computes the mean of that array.

In (d), we calculate the p_value. Recall that the p_value is the chance, under the null hypothesis, that the test statistic is equal to the value that was observed in the data or is even further in the direction of the alternative. Here, larger values of the test statistic point toward the alternative. np.count_nonzero(simulated_stats >= observed_stat) gives us the number of simulated_stats, out of the 10000 simulations, that are greater than or equal to observed_stat, so we divide it by 10000 to get the proportion, and this gives us the p_value.
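
Putting the four blanks together, the whole simulation can be sketched as below. The call to np.random.seed is an addition made here only so that the sketch is reproducible; it is not part of the original problem. The intermediate name simulated_counts is also introduced here for readability.

```python
import numpy as np

np.random.seed(0)  # assumption: seeded only for reproducibility of this sketch

admissions_data = np.array([550, 1550, 400])
model = np.array([0.25, 0.6, 0.15])

def as_proportion(data):
    return data / data.sum()

# Observed statistic: mean absolute difference between observed proportions and the model.
observed_stat = np.abs(as_proportion(admissions_data) - model).mean()

simulated_stats = np.array([])
for i in np.arange(10000):
    # Draw a sample of the same size as the real data, using the model's proportions.
    simulated_counts = np.random.multinomial(admissions_data.sum(), model)
    simulated_proportions = as_proportion(simulated_counts)
    simulated_stat = np.abs(simulated_proportions - model).mean()
    simulated_stats = np.append(simulated_stats, simulated_stat)

# Large values of the statistic favor the alternative, so we count >=.
p_value = np.count_nonzero(simulated_stats >= observed_stat) / 10000
print(p_value)
```

The printed p-value depends on the random draws, so no specific value is quoted here.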

The average score on this problem was 79%.

True or False: the p-value represents the probability that the null hypothesis is true.

True

False

Answer: False

Recall, the p-value is the chance, under the null hypothesis, that the test statistic is equal to the value that was observed in the data or is even further in the direction of the alternative. It is computed assuming the null hypothesis is true, so it only tells us how consistent the observed data is with the null hypothesis, which is different from “the probability that the null hypothesis is true”.

The average score on this problem was 64%.

The new statistic that we used for this hypothesis test, the mean of

the absolute differences in proportions, is in fact closely related to

the total variation distance. Given two arrays of length three,

array_1 and array_2, suppose we compute the

mean of the absolute differences in proportions between

array_1 and array_2 and store the result as

madp. What value would we have to multiply

madp by to obtain the total variation distance between

array_1 and array_2? Give your answer as a

number rounded to three decimal places.

Answer: 1.5

Recall that the total variation distance (TVD) is the sum of the absolute differences in proportions, divided by 2. The mean of the absolute differences in proportions is the sum of the absolute differences in proportions, divided by the number of groups (which is 3). Thus, to get the TVD, we first multiply madp by 3, which gives the sum of the absolute differences in proportions, and then divide by 2, as in the definition of TVD. Thus, we have $\text{TVD} = \text{madp} \cdot 3 / 2 = \text{madp} \cdot 1.5$.
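
As a quick numerical check of this relationship, the sketch below uses the observed proportions and the model from the earlier parts as array_1 and array_2. Any two length-three distributions would work equally well; these values are just a convenient choice.

```python
import numpy as np

array_1 = np.array([0.22, 0.62, 0.16])
array_2 = np.array([0.25, 0.6, 0.15])

# Mean of the absolute differences in proportions.
madp = np.abs(array_1 - array_2).mean()

# Total variation distance: half the sum of the absolute differences.
tvd = np.abs(array_1 - array_2).sum() / 2

print(np.isclose(tvd, madp * 1.5))  # True
```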

The average score on this problem was 65%.